Anthropic’s Ethical Quest: Beating the Drum for Constitutional AI

Today, as AI development surges forward, concerns about privacy and safety have become increasingly pronounced.

Anthropic, founded by former OpenAI visionaries, is at the forefront of addressing these concerns.

Drawing from a diverse talent pool encompassing machine learning, physics, policy, and product development, Anthropic has set out on a transformative journey.

Their mission? To not only pioneer cutting-edge AI advancements but also to ensure that these developments are underpinned by safety and ethical considerations.

Anthropic: Forging a Safer Path in AI Evolution

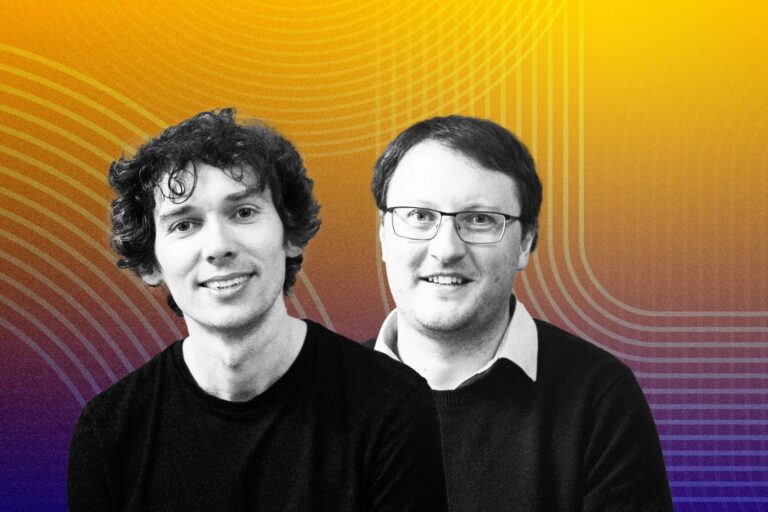

Anthropic was founded in 2021 by former senior members of OpenAI, most notably the sibling duo Daniela Amodei and Dario Amodei. Dario previously held the prestigious role of Vice President of Research at OpenAI.

They left OpenAI with the aim of building models that would be more reliable and trustworthy.

According to Dario: “There was a group of us within OpenAI, that in the wake of making GPT-2 and GPT-3, had a kind of very strong focus belief in two things. I think even more so than most people there. One was the idea that if you pour more compute into these models, they’ll get better and better and that there’s almost no end to this. I think this is much more widely accepted now.”

“But I think we were among the first believers in it and the second was the idea that you needed something in addition to just scaling the models up, which is alignment or safety. You don’t tell the models what their values are just by pouring more compute into them. And so, there were a set of people who believed in those two ideas. We really trusted each other and wanted to work together. And so, we went off and started our own company with that idea in mind,” he added.

Dario and Daniela, aged 40 and 36 respectively, bring a forward-thinking approach to AI alignment that they believe is safer and more responsible than the approaches pursued by other cutting-edge AI companies.

Besides the Amodeis, notable team members include research lead Sam McCandlish, GPT-3 lead engineer Tom Brown, research scientist Amanda Askell, policy director Jack Clark, and several other technical staff.

Amid growing concerns regarding the accuracy, bias, and potential risks associated with generative AI programs, Anthropic, an Alphabet-backed AI startup, has taken a proactive step by revealing the value guidelines it has employed to train its generative AI system, Claude.

Anthropic’s distinctive journey in the AI landscape has been marked by groundbreaking research in “mechanistic interpretability.” This research strives to provide developers with a means to delve beneath the surface of AI systems, offering a window into the inner workings of these systems beyond their text outputs, which often fail to reveal the true mechanics at play.

Anthropic’s approach involves training Claude using what they call “Constitutional AI.” This system is based on a predefined set of principles that guide the AI’s decision-making process, aimed at avoiding the generation of toxic or discriminatory outputs. These principles are designed to prevent the AI from assisting humans in illegal or unethical activities.

The company highlighted these initiatives in a blog post, underlining their commitment to creating an AI system that is not only helpful but also characterized by honesty and harmlessness.

This transparency and commitment to ethical AI practices have been well-received by experts in the field. Avivah Litan, a distinguished analyst at Gartner Research, praised Anthropic’s decision to openly share the principles governing Claude’s training.

In essence, Constitutional AI lets developers codify an explicit set of values into an AI, rather than relying on the implicit and imperfect value-setting that often occurs with other methods.

Dario emphasizes the importance of this distinction: “I think it’s useful to separate out the technical problem of: the model is trying to comply with the constitution and might or might not do a perfect job of it, [from] the more values debate of: Is the right thing in the constitution?”

This separation, often missing from earlier discussions, could lead to more productive conversations about both the inner workings and the ethical dimensions of AI systems.

The Framework of Constitutional AI: Ensuring Ethical and Responsible AI Behavior

Let’s delve deeper into the concept of “Constitutional AI” as applied by Anthropic in guiding the behavior of their AI model, Claude.

“Constitutional AI” serves as the foundational framework for defining the values and principles that dictate how AI systems like Claude should interact with users.

In the words of Jared Kaplan, Anthropic’s co-founder, this constitution represents a commitment to transparency: “We are sharing Claude’s current constitution in the spirit of transparency.” It outlines a set of core values, including being “helpful, harmless, and honest,” and provides specific guidelines on handling sensitive topics, respecting user privacy, and avoiding any engagement in illegal activities.

This constitution is a living document, open to refinement, reflecting the company’s dedication to adapt to changing ethical concerns.

Claude, Anthropic’s flagship product, stands out for its versatile capabilities, including generating poems, stories, code, and more. It also offers support in improving and optimizing user-generated content.

The constitution is instrumental in ensuring that Claude remains a reliable and ethical AI system by setting the boundaries and guidelines for its behavior, a point emphasized by Kaplan when he mentioned, “all uses of our model need to fall within our Acceptable Use Policy.”

In light of the escalating concerns regarding the potentially devastating impact of advanced AI models, Anthropic has introduced a groundbreaking policy known as the Responsible Scaling Policy (RSP).

The RSP, as explained by Sam McCandlish, Anthropic’s co-founder, is designed to tackle “catastrophic risks” head-on, emphasizing situations where AI models could directly cause large-scale harm.

To achieve this, the policy introduces AI Safety Levels (ASLs) that help assess and manage the potential risk associated with different AI systems, ranging from ASL-1 for systems that pose no meaningful catastrophic risk up through ASL-3 and beyond for more capable, higher-risk models.

McCandlish acknowledges that the policy will keep evolving along with AI technology itself: “We can never be totally sure we are catching everything, but will certainly aim to.”

Anthropic’s Claude: Elevating Language Models to New Heights

Claude, developed by Anthropic, is at the forefront of generative AI capabilities, having recently expanded its processing capacity from 9,000 tokens to an impressive 100,000 tokens, equating to about 75,000 words.

This significant enhancement opens doors to a myriad of potential applications. Claude can now swiftly process vast amounts of text, akin to the length of an entire novel.

To put this into perspective, it can read and analyze classics like Ernest Hemingway’s “A Farewell to Arms,” Mary Shelley’s “Frankenstein,” or Mark Twain’s “The Adventures of Tom Sawyer” in under a minute. This capacity makes Claude invaluable for rapid information extraction and synthesis.

Generative AI systems, including Claude, can only process a limited number of tokens at once (their “context window”), where tokens are word fragments.

Claude’s expansion to 100,000 tokens far exceeds the context window of most generative AI models, such as OpenAI’s GPT-4, which typically handles around 8,000 tokens, with an extended version that handles up to 32,000 tokens. By comparison, OpenAI’s publicly available ChatGPT chatbot is limited to around 4,000 tokens.
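To make the token arithmetic concrete, here is a rough sketch, not any vendor’s tokenizer. It assumes the ratio implied by the article’s own figures (100,000 tokens ≈ 75,000 words, or about 0.75 words per token) and uses the context-window sizes quoted above. The names `estimate_tokens`, `models_that_fit`, and the dictionary labels are hypothetical illustrations; real token counts vary with the text, the language, and the specific tokenizer.

```python
# Rough heuristic taken from the article's figures: 100,000 tokens ≈ 75,000 words.
# This is an assumption for English prose, not a property of any real tokenizer.
REF_TOKENS = 100_000
REF_WORDS = 75_000

# Context-window sizes (in tokens) as quoted in the article. Labels are illustrative.
CONTEXT_WINDOWS = {
    "Claude (9K)": 9_000,
    "Claude (100K)": 100_000,
    "GPT-4 (8K)": 8_000,
    "GPT-4 (32K)": 32_000,
    "ChatGPT (4K)": 4_000,
}


def estimate_tokens(word_count: int) -> int:
    """Estimate tokens for `word_count` words, rounding up.

    Uses integer ceiling division (-(-a // b)) to avoid floating-point error:
    tokens ≈ words × 100,000 / 75,000.
    """
    return -(-word_count * REF_TOKENS // REF_WORDS)


def models_that_fit(word_count: int) -> list[str]:
    """Return the labels whose context window can hold the estimated token count."""
    needed = estimate_tokens(word_count)
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


if __name__ == "__main__":
    words = 70_000  # a hypothetical novel-length manuscript
    print(estimate_tokens(words), models_that_fit(words))
```

Under this heuristic, a 70,000-word manuscript comes out at about 93,334 tokens, which fits only the 100K window. A 6,000-word document (about 8,000 tokens) fits every window except ChatGPT’s. Integer ceiling division is used so that the estimate never rounds a text down into a window it would actually overflow.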

What truly distinguishes Claude is its innovative approach, often referred to as “constitutional AI”, as mentioned above. Anthropic has painstakingly designed Claude to prevent generating outputs that are sexist, racist, toxic, or supportive of illegal or unethical activities.

By implementing a set of guiding principles, Claude offers a “principle-based” approach to align AI behavior with human intentions, significantly enhancing its ethical and responsible use.

Early user feedback indicates that Claude excels in providing responses that are less harmful and manipulative, making it a more approachable conversational partner.

However, there are some notable limitations to consider. Claude’s performance in mathematical and grammatical tasks falls behind its rival, ChatGPT. Additionally, the chatbot sometimes invents names and terms that do not exist, occasionally leading to unexpected responses.

Despite these limitations, the backing of industry giants like Google underscores Anthropic’s potential to become a major player in the AI industry. Anthropic has adopted a focused approach, targeting specific use cases rather than pursuing a broad direct-to-consumer strategy, with the goal of delivering a superior, more tailored product.

Claude’s ability to process vast amounts of text and its unwavering commitment to ethical AI principles make it a notable advancement in the field of generative AI.

Dario Amodei, CEO of Anthropic, highlights that language serves as an engaging laboratory for AI research, encapsulating an abundance of information from diverse textual sources like websites, books, and articles. It reflects our culture, values, and collective knowledge. Tom Brown, co-founder of Anthropic, eloquently notes that “We encode all of our culture as language.”

Comparing language models involves more than benchmarking their speed, and on qualitative measures Anthropic’s Claude has garnered praise. Claude 2, in particular, is admired for its “pleasant” AI personality and its proficiency in working with documents, according to Wharton professor and AI advocate Ethan Mollick. Jim Fan, an AI research scientist at NVIDIA, acknowledges Claude’s progress, saying it is “catching up fast,” although it has yet to reach GPT-4’s level.

Anthropic Secures $450 Million in Series C Funding with Key Investments from Tech Giants

Anthropic has secured a substantial $450 million in a Series C funding round, led by Spark Capital. This round’s valuation hasn’t been officially disclosed, but prior reports suggested it could exceed $4.1 billion, indicating strong investor confidence in Anthropic’s AI technology.

Notably, this funding round includes participation from tech giants such as Google (Anthropic’s preferred cloud provider), Salesforce (via its Salesforce Ventures wing), and Zoom (via Zoom Ventures), alongside other undisclosed venture capital parties.

“We are thrilled that these leading investors and technology companies are supporting Anthropic’s mission: AI research and products that put safety at the frontier,” said CEO Dario Amodei. “The systems we are building are being designed to provide reliable AI services that can positively impact businesses and consumers now and in the future.”

Zoom recently announced a collaboration with Anthropic to develop customer-facing AI products that emphasize reliability, productivity, and safety, following a similar partnership with Google. Anthropic has disclosed that it serves more than a dozen customers across various industries, including healthcare, HR, and education.

It’s worth noting that the Series C funding coincided with Spark Capital’s appointment of Fraser Kelton, former head of product at OpenAI, as a venture partner. Spark Capital was an early investor in Anthropic and has intensified its efforts to identify early-stage AI startups, particularly in the thriving field of generative AI.

Yasmin Razavi, a general partner at Spark Capital who joined Anthropic’s board of directors in connection with the Series C, expressed enthusiasm about the partnership and Anthropic’s mission to build reliable and ethical AI systems.

She highlighted the positive response to Anthropic’s products and research, underscoring the broader potential of AI to usher in a new era of prosperity in our societies.

With the $450 million Series C, Anthropic’s total funding now amounts to $1.45 billion. This places Anthropic among the best-funded AI startups, second only to OpenAI, which has raised over $11.3 billion to date, according to Crunchbase. Competing AI startup Inflection AI, focused on an AI-powered personal assistant, has secured $225 million in funding, while another rival, Adept, has raised approximately $415 million.

Amazon’s subsequent investment in Anthropic is part of its effort to keep pace with its tech industry rivals. Microsoft and Google have each committed substantial investments in AI research, and Amazon is now seeking to bolster its position in this rapidly evolving field.

Anthropic, considered one of the most promising AI startups, will tap into Amazon’s resources, including its data centers, cloud-computing platform, and AI chips.

This investment underscores the intense competition to be at the forefront of AI, a technology that has captured the public’s imagination and has the potential to revolutionize how people work and live.

As part of this race, major tech companies are partnering with emerging AI startups, offering them both computing power and financial support to facilitate the development of new AI models and applications.

Notably, Google has also invested in Anthropic, while Microsoft has committed a substantial $13 billion to OpenAI.

Amazon’s investment of up to $4 billion in Anthropic would grant the company a minority stake in the startup, as confirmed by Amazon.

The Future of AI and Language Models: Anthropic’s Ambitions and Insights

Anthropic’s aspirations are nothing short of monumental, as revealed in a leaked pitch deck outlining their plan to raise up to $5 billion in the next two years.

Their audacious goal is to construct highly sophisticated AI models capable of automating substantial segments of the economy. The recent substantial investment from Amazon, as discussed earlier, marks a significant stride toward realizing this formidable objective.

While Anthropic’s grand commercial ambitions may initially seem at odds with their resolute commitment to being a “safety-first” company, they argue that the advancement of AI safety is intrinsically linked to pushing the boundaries of AI capabilities.

Jared Kaplan, a former theoretical physicist, underscores the value of real-world models as an external source of truth, emphasizing that it’s vital to move beyond theoretical discussions. Building such models is an intricate endeavor that demands significant resources, and Anthropic sees itself as well positioned to execute this vision.

The idea that Anthropic must raise billions of dollars to do effective safety research may seem questionable, given the substantial risks associated with powerful AI. However, its status as a public benefit corporation gives it some legal protection from shareholders who seek primarily financial returns.

Moreover, Dario Amodei’s insights into the future of AI and large language models (LLMs) depict a promising landscape. For 2024, he envisions substantial improvements in LLMs, such as higher accuracy, a deeper understanding of nuanced queries, and more fluent conversation.

Amodei’s strategic perspective places a strong emphasis on the current limitations of language models, urging businesses to pay attention to tasks where the models still fall short, even those they complete successfully only 40% of the time.

These identified deficiencies serve as invaluable opportunities for significant improvement. Understanding the intricacies of context and the complex reasoning capabilities that these models currently lack is crucial.

Amodei encourages businesses to develop products with a clear focus on advancing natural language processing technology, fostering innovation.

In a remarkable display of candor and humility, Amodei openly acknowledges a past prediction that did not unfold as expected: that language models would evolve into autonomous agents through reinforcement learning.

The industry’s trajectory shifted toward boosting computing power and enhancing neural network complexity, deviating from initial expectations.

Nevertheless, Amodei remains optimistic, emphasizing the industry’s resilience and capacity to adapt. Companies are now making substantial investments in computing power and the intricacies of neural network structures, recognizing their pivotal role in unlocking new possibilities and overcoming challenges.

Amodei’s predictions extend beyond the consumer realm into the business landscape, where he anticipates companies harnessing these advancements.

By 2025-2026, these advances could mark a turning point in societal norms and expectations, as AI continues to evolve and redefine how we interact with and benefit from it.