AI Reads Your Mind: Getting Closer to the Plot of Minority Report

People have always loved thinking about the future, and science fiction is a great way to do that. But because it’s just made-up stories, some folks think the super cool tech in these stories will never actually happen in real life.

As we edge closer to a new era, it turns out some of the craziest ideas from movies and books are coming true, all thanks to science fiction. Believe it or not, things like video chatting, cell phones, tablets, drones, and robots might not be a thing if creative writers hadn’t dreamed them up first!

Remember the movie “Minority Report,” released in 2002?

In the film, the year is 2054. Murders can be stopped before they even occur, thanks to the PreCrime police unit, which combines advanced technology with the Precogs. The Precogs are three human beings with powers of precognition that allow them to visualize crimes before they happen.

It’s scary, but at the end of the day, it’s just something in a movie. The plot twist is that in 2023, something similar exists in reality.

Semantic Decoder – Overcoming Limitations to See the Light of Day

A new artificial intelligence system called a semantic decoder can translate a person’s brain activity — while listening to a story or silently imagining telling a story — into a continuous stream of text.

The study, published in the journal Nature Neuroscience, was led by Jerry Tang, a doctoral student in computer science, and Alex Huth, an assistant professor of neuroscience and computer science at UT Austin.

Language decoders have been around for a while, but combining them with the latest advances in generative AI is a game-changer. In the past, these decoders could only pick up a few words or phrases, but Jerry Tang and his team have taken it to the next level. Their decoder can reconstruct continuous language from speech a person hears, speech a person imagines, and even silent videos a person watches.

The system works by analyzing non-invasive brain recordings made with functional magnetic resonance imaging (fMRI). The decoder is built to turn what a person perceives or imagines into continuous natural language.

Dr. Alexander Huth, who co-led the study, said, “We were kind of shocked that it works as well as it does. I’ve been working on this for 15 years, so it was shocking and exciting when it finally did work.”

What’s interesting about the research is its use of generative AI. To make sure the decoded words followed proper English grammar and structure, the researchers integrated a generative neural network language model. To be more specific, they used GPT-1, an early predecessor of the models behind the now-famous ChatGPT.

This model can predict the next words in a sequence pretty well. The challenge is that there are so many possible sequences that it’s not practical to check each one separately. That’s where a smart algorithm called beam search comes in handy.

This approach narrows down the possibilities step by step: at each step, only the most promising candidate sequences are kept. By extending only those candidates, the decoder keeps refining its predictions and settles on the most likely words as it goes along.
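To make the idea concrete, here is a minimal beam search sketch in Python. It is not the researchers' code: the `NEXT_WORD_PROBS` table is a made-up stand-in for a language model, the function name and `beam_width` parameter are hypothetical, and candidates are scored only by these toy word probabilities rather than by any brain recording.

```python
import math

# Hypothetical next-word probabilities, invented purely for illustration.
# A real system would get these from a language model such as GPT-1.
NEXT_WORD_PROBS = {
    "<s>": {"i": 0.9, "an": 0.1},
    "i": {"ate": 0.7, "consumed": 0.3},
    "an": {"apple": 0.9, "orange": 0.1},
    "ate": {"an": 0.8, "a": 0.2},
    "consumed": {"a": 0.6, "an": 0.4},
    "a": {"fruit": 1.0},
    "apple": {"</s>": 1.0},
    "orange": {"</s>": 1.0},
    "fruit": {"</s>": 1.0},
}


def beam_search(beam_width=2, max_steps=6):
    """Return the best sequences as (log_probability, words) pairs."""
    beams = [(0.0, ["<s>"])]
    for _ in range(max_steps):
        candidates = []
        for log_prob, words in beams:
            last = words[-1]
            if last == "</s>":
                # Finished sequences are carried over unchanged.
                candidates.append((log_prob, words))
                continue
            # Extend the sequence with every possible next word.
            for word, prob in NEXT_WORD_PROBS[last].items():
                candidates.append((log_prob + math.log(prob), words + [word]))
        # Keep only the most promising candidates for the next step.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:beam_width]
        if all(words[-1] == "</s>" for _, words in beams):
            break
    return beams


for log_prob, words in beam_search(beam_width=2):
    print(" ".join(words[1:-1]), round(math.exp(log_prob), 4))
```

With a beam width of 2, only two candidate sentences survive each step, so the search never has to enumerate every possible sequence. A wider beam explores more options at a higher cost.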

Let’s look at an example. When a person hears or thinks the word “apple,” the decoder learns the corresponding brain activity. When the person later encounters a new word or sentence, the algorithm uses these learned associations to generate a word sequence that matches the meaning of the language stimulus.

If a person hears or thinks “I ate an apple,” the algorithm may generate “I consumed a fruit” or “an apple was in my mouth.” The generated word sequence may not be an exact replica, but it captures the semantic representation.

By combining a clever encoding system, generative AI, and an intelligent search algorithm, the scientists managed to overcome the limitations of non-invasive fMRI.

After overcoming these challenges, the researchers were ready to test the system’s capabilities. They trained decoders for three individuals and evaluated each person’s decoder on brain responses recorded while that person listened to two new stories. The objective was to see if the decoder could grasp the meaning of the information, and the results were truly remarkable.

The decoded word sequences not only captured the meaning of the information but often replicated the exact words and phrases.

Taking a step further, the researchers examined their system’s performance on imagined speech, a key aspect for interpreting silent speech in the brain. Participants were asked to imagine stories while undergoing fMRI scans, and the decoder effectively identified the content and meaning of their imagined narratives.

Researchers said they were surprised that the decoders still worked even when the participants weren’t hearing spoken language.

Shailee Jain, a member of the team, stated, “Even when somebody is watching a movie, the model can predict word descriptions of what they’re seeing.”

This study represents a significant stride in non-invasive brain-computer interfaces, though it also highlights the need to integrate motor features and participant feedback to refine the decoding process. And there’s more to the story: Meta has entered the scene, redefining the limits of what we once thought possible.

Meta Psychics – Translate Brain Activity into Vivid Pictures

On October 18th, 2023, Meta announced an important milestone in the pursuit of “mind reading.”

Instead of fMRI, which captures only about one data point every few seconds, Meta relied on magnetoencephalography (MEG), another non-invasive neuroimaging technique capable of taking thousands of brain activity measurements per second.

If you wonder how MEG scans work, they measure the tiny magnetic fields produced by the brain’s electrical activity. This lets researchers track the timing of brain activity with a precision of around 10 milliseconds.
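A quick back-of-the-envelope calculation shows how large that gap in temporal resolution is. The specific rates below are assumptions chosen to match the rough figures above (one fMRI data point every 2 seconds, 1,000 MEG measurements per second); real scanners vary.

```python
# Assumed, illustrative rates; actual scanner settings differ between studies.
FMRI_SECONDS_PER_SAMPLE = 2.0    # "one data point every few seconds"
MEG_SAMPLES_PER_SECOND = 1000.0  # "thousands of measurements per second"


def samples_in_window(window_seconds, samples_per_second):
    """Number of measurements collected during a time window."""
    return round(window_seconds * samples_per_second)


window = 10.0  # seconds of brain activity
fmri_samples = samples_in_window(window, 1.0 / FMRI_SECONDS_PER_SAMPLE)
meg_samples = samples_in_window(window, MEG_SAMPLES_PER_SECOND)

print(f"fMRI: {fmri_samples} samples in {window:.0f} s")
print(f"MEG:  {meg_samples} samples in {window:.0f} s")
print(f"MEG collects {meg_samples // fmri_samples}x more samples")
```

Under these assumptions, ten seconds of scanning yields 5 fMRI data points versus 10,000 MEG measurements, which is why MEG can follow brain activity moment by moment.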

During a MEG scan, the participant is usually given a task, and brain activity is measured while they perform it. The participant also wears a special cap containing electrodes that measure the brain’s electrical activity.

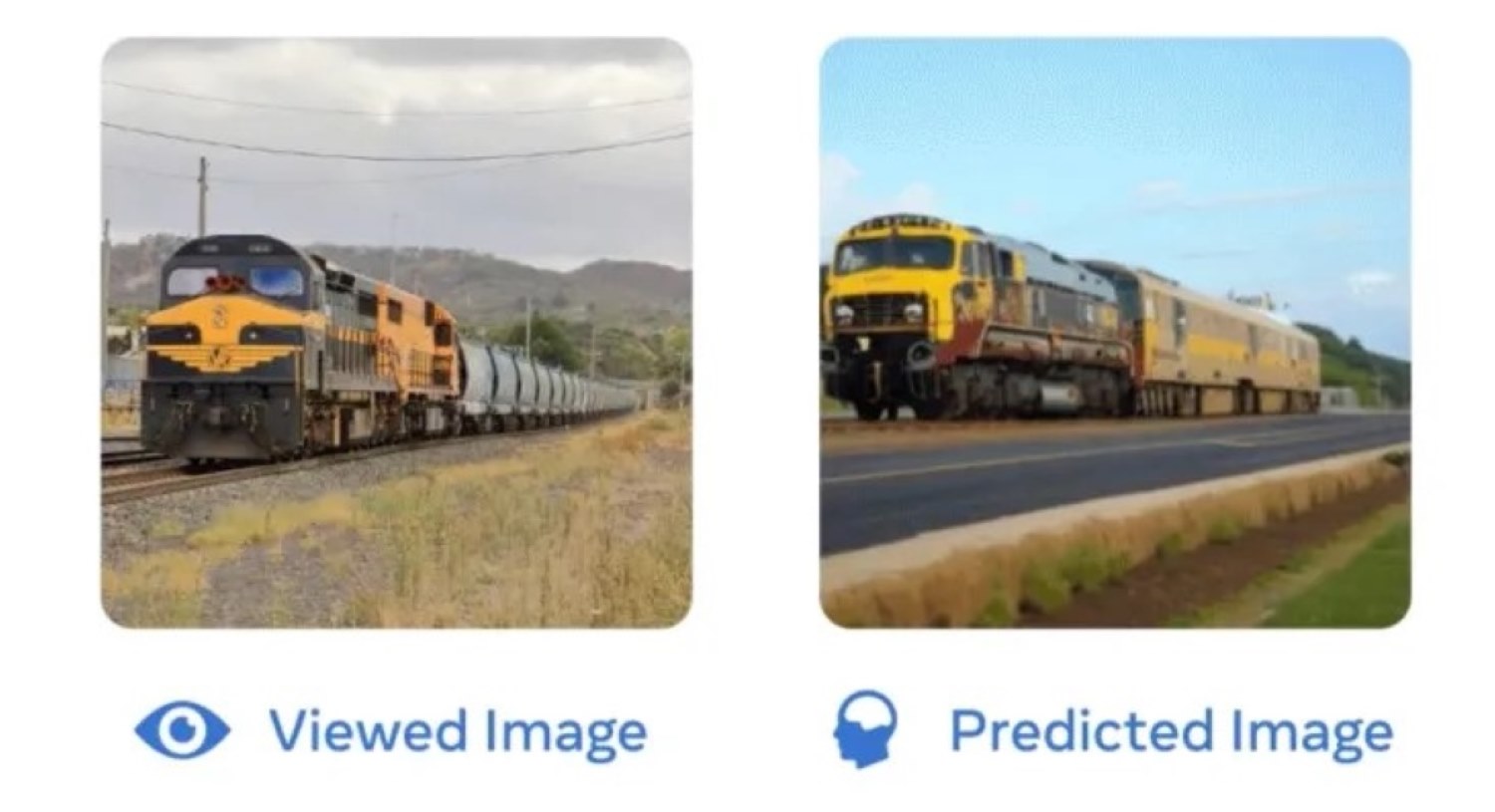

Using this technique, Meta managed to read the minds of people and interpret what they were looking at. Look at the picture below.

On the left is the original image shown to the participant, and on the right is what the AI thinks the person is looking at. Keep in mind, the image generated was solely based on brain waves; the AI had no clue about what the person was specifically looking at. The resulting images could accurately capture general object categories but struggled with finer details, showing some instability.

So, what is the difference between the fMRI scans in the previous paper and this one?

Meta, Facebook’s parent company, said that the functional alignment between such AI systems and the brain can be used to guide the generation of an image similar to what the participant sees in the scanner.

Meta stated, “While our results show that images are better decoded with fMRI scans, our MEG decoder can be used at every instant of time and thus produce a continuous flux of images decoded from brain activity.” In simple terms, Meta is saying that its approach delivers results almost instantly, while the fMRI-based method needs more time.

Keep in mind that the two studies are not directly comparable: the University of Texas decoder described above produces text, whereas Meta’s produces images.

Meta says its research ultimately aims to guide the development of AI systems designed to learn and reason like humans. But since Meta has never been known for prioritizing user privacy and consent, some people are wary.

Mind Reading – An Upcoming Catastrophe for Privacy?

Imagine a scenario where Meta or Google can know not only what you’re looking at but even what you’re thinking, all with the aim of improving their ad targeting or swaying public opinion in various ways.

But let’s take a step back and look at the problem calmly.

It’s essential to grasp that undergoing this process involves entering one of those machines. It’s not a quick zap to the forehead. Naturally, you would have to provide your consent to be placed under the machine for them to scan your brain.

The researchers at the University of Texas also stressed that decoding was only effective with cooperative participants who willingly participated in training the decoder. When tested on untrained individuals or those who actively resisted by thinking other thoughts, the results were unintelligible and unusable.

Jerry Tang, one of the researchers, expressed the team’s commitment to addressing these concerns, saying: “We take very seriously the concerns that it could be used for bad purposes and have worked to avoid that. We want to make sure people only use these types of technologies when they want to and that it helps them.”

So, the key point here is that this technology is not invasive in the sense that governments could easily read everyone’s thoughts, which is what many people worry about.

There is still a concern that if this technology is fully developed and perfected, and we manage to decode brain signals word for word or with extremely precise output, our minds will become less private. However, it’s important to note that this isn’t the final destination of this technology, especially considering the vast possibilities it holds for the physically impaired.

Vast Potential – A Game Changer in Clinical Applications

One application of the technology is helping people who are mentally conscious but unable to speak or communicate. Let’s take a look at one case.

Keith Thomas, who was paralyzed from the chest down after a diving accident in July 2020, is able to move his hand again thanks to a cutting-edge clinical trial led by researchers from Northwell Health’s Feinstein Institutes for Medical Research in New York.

As explained by Northwell Health, this breakthrough was made possible with an innovative “double neural bypass” procedure. First, surgeons implanted microchips in Thomas’ brain in the regions that control movement and touch sensation in the hand.

The chips interface with AI algorithms that “re-link his brain to his body and spinal cord,” interpreting Thomas’ thoughts and translating them into actions.

So far, the therapy seems to be working. Thomas is now able to lift his arms and can feel sensations on his skin, including the touch of his sister’s hand.

“It’s indescribable,” Thomas says, “to be able to feel something.”

To show how much potential this technology has in the clinical field, consider what Nita Farahany shared in a TED Talk. She is an author and a distinguished professor and scholar of the ramifications of new technology for society, law, and ethics.

She stated, “If I had had DecNef available to me at the time, I might have overcome my PTSD more quickly without having to relive every sound, terror, and smell in order to do so. I’m not the only one. Sarah described herself as being at the end of her life, no longer in a life worth living, because of her severe and intractable depression. Then, using implanted brain sensors that reset her brain activity like a pacemaker for the brain, Sarah reclaimed her will to live.”

Farahany continued, “While implanted neurotechnology advances have been extraordinary, it’s the everyday brain sensors that are embedded in our ordinary technology that I believe will impact the majority of our lives. Like the one third of adults and nearly one quarter of children who are living with epilepsy for whom conventional anti-seizure medications fail.”

Now, researchers from Israel to Spain have developed brain sensors that combine AI pattern recognition with consumer electroencephalography to detect epileptic seizures from minutes up to an hour before they occur, sending potentially life-saving alerts to a mobile device.

Regular use of brain sensors could even enable us to detect the earliest stages of the most aggressive forms of brain tumors, like glioblastoma, where early detection is crucial to saving lives.

“The same could hold true for Parkinson’s disease, Alzheimer’s, traumatic brain injury, ADHD, and even depression. We may even change our brains for the better,” she said.