The Next Note: AI’s Impact on Music Composition

The generative AI music market is projected to reach a valuation of $3 billion by 2028, according to a report commissioned by SACEM and GEMA. The report highlights that AI-driven music creation tools are becoming more sophisticated, allowing for higher quality and more diverse compositions, which in turn is driving market expansion.

Music creation is changing quickly with the arrival of AI-powered platforms like Suno and Udio, which generate complete songs from a short text description. These tools are reshaping how music is composed, distributed, and consumed, and they put song creation within reach of people with no musical training.

Historical Context: The Evolution of AI in Music

Making music with computers dates back to 1957 and Lejaren Hiller and Leonard Isaacson’s “The Illiac Suite,” the first computer-aided composition. In the 1980s, David Cope’s Experiments in Musical Intelligence (EMI) generated new music in the styles of various composers.

In 1985, a computer accompaniment system used algorithms to generate complementary parts in response to live input. Even David Bowie experimented with algorithmic songwriting in the 1990s through the Verbasizer, a program that randomly recombined phrases to spark lyrics. The modern AI boom began around 2012, as neural networks started replacing hand-coded algorithms, and in 2016 Google’s Project Magenta released a piano melody generated by a neural network, a significant step forward.

In 2017, musician Taryn Southern used AI tools to produce commercial music, finding them revolutionary despite the manual effort they still required.

More recent AI music generators, such as Google’s MusicLM, OpenAI’s Jukebox, Stable Audio, Adobe’s Project Music GenAI, and YouTube’s Dream Track, aimed to generate music from text prompts or other inputs but faced limitations: their output often sounded rigid and lacked a human touch.

Advances in deep learning and cloud computing have since transformed AI-driven music generation. OpenAI’s Generative Pre-trained Transformer 3 (GPT-3), a text model, showed that large transformer networks can produce coherent output from minimal user input, and many music generators apply similar architectures to sequences of musical or audio tokens.

Another milestone in the legitimization of AI’s role in music creation is AIVA (Artificial Intelligence Virtual Artist). Its recognition as a composer by France’s SACEM (Society of Authors, Composers, and Publishers of Music) marked a turning point in acknowledging AI’s contribution to music.

Today, platforms like Suno and Udio let anyone create music by typing a text prompt, simplifying the process and making high-quality music creation broadly accessible.

The Future of Music: How AI Platforms Suno and Udio Are Changing the Game

Creating music has always been a complex process, and platforms like Suno and Udio aim to make it accessible to anyone.

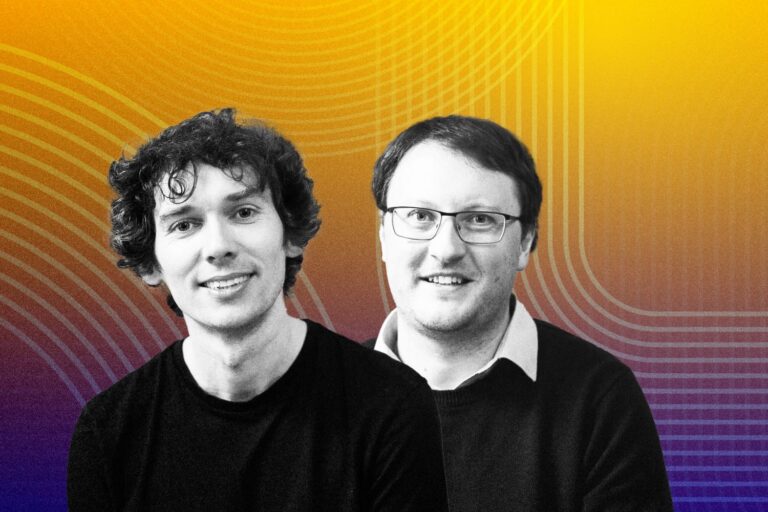

Suno: AI-Powered Music Creation

Suno’s co-founder Mikey Shulman aptly summarized this evolution: “Creating music is an inherently complex process but we’re streamlining that, making it more accessible and intuitive than ever before.”

Suno emerged from stealth mode in December 2023 and quickly gained attention through a partnership with Microsoft that integrated its music generator into the Copilot chatbot, giving the platform a high-profile debut.

What sets Suno apart from AI music tools such as Google’s MusicFX or Meta’s AudioCraft models (MusicGen and AudioGen) is its ability to generate fully formed songs, complete with lyrics and vocals.

Udio: Setting the Pace in AI-Powered Music Production

Udio, founded in late 2023, emerged from stealth mode in April 2024.

Like Suno, Udio lets anyone create music without prior experience by typing a text prompt that describes the subject, instruments, mood, or custom lyrics. A track is ready within minutes: Suno takes about two minutes, while Udio produces output in about 30 seconds. Both platforms can extend tracks and generate alternative variations, and both are currently free to use.

Suno’s V3, available to all users including those on the free tier, has been touted as the best AI music generator yet. Even so, Udio’s first version, despite being newer, has been praised as superior to it, and Udio’s launch has sparked comparisons to a “ChatGPT moment” for AI music, with some even calling it the “Sora of music.”

These comparisons are often overhyped, but they hold some truth: Udio’s outputs can feature advanced production techniques such as sidechaining, tape effects, and nuanced reverb and delay on vocals, and some tracks sound remarkably real.

However, Udio has limitations: occasional errors, little ability to edit a track after it is generated, low fidelity on some tracks, and difficulty with specific genres such as UK jungle.

Ethical Concerns in AI Music Generation

The ethical concerns raised by AI music platforms like Udio and Suno are multifaceted, touching on copyright infringement, artistic integrity, and the role of AI in creative expression.

Investigations into whether these systems were trained on copyrighted material, prompted by the striking similarity between some generated outputs and existing songs such as ABBA’s “Dancing Queen,” underscore the potential legal implications of AI music generation.

While it’s possible to achieve similar results without directly incorporating copyrighted material into the training dataset, the resemblance is undeniable and raises concerns about intellectual property rights.

Furthermore, some investors, such as Antonio Rodriguez, have said they accept the possibility of lawsuits from record labels, which suggests they recognize the legal risks associated with AI-generated music.

Despite assurances from Suno about respecting artists’ work and engaging in discussions with major record labels, skepticism remains, especially in light of the recent open letter signed by over 200 artists urging AI developers and tech companies to stop using AI to infringe upon human artists’ rights.

The involvement of prominent artists like Billie Eilish and Metro Boomin in this call to action highlights the widespread concern within the music industry about the impact of AI on artistic integrity and the devaluation of human creativity. The future of the music industry is at a crossroads, with stakeholders grappling with the ethical implications of AI technology and the need to balance innovation with respect for artistic rights.

Ultimately, addressing these ethical concerns requires collaboration between AI developers, tech companies, record labels, artists, and regulatory bodies to establish clear guidelines and safeguards to protect the rights and interests of all parties involved.

Empowering Music with AI: Advancements and Potential Benefits

By harnessing advanced algorithms and machine learning techniques, AI is poised to revolutionize how musicians create, collaborate, and connect with audiences worldwide.

AI’s Role in Enhancing the Music Creation Process for Musicians

AI music generators build on ideas similar to those behind large language models (LLMs): neural networks trained on vast datasets of music learn its patterns and then generate new output based on user input.
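
Modern generators rely on large neural networks. The core idea of learning patterns from example music and then sampling new material can still be sketched with a far simpler model. The example below is a minimal illustration, not how Suno, Udio, or any production system works. It trains a first-order Markov chain on a few hypothetical melodies, written as note names. It then builds a new melody by repeatedly picking a next note that followed the current one somewhere in the training data. The function names and the toy corpus are illustrative assumptions.

```python
import random
from collections import defaultdict

def train_markov(melodies: list[list[str]]) -> dict[str, list[str]]:
    """Record every note that follows each note in the training melodies.

    Duplicates are kept in the successor lists, so transitions seen more
    often in the data are sampled more often during generation.
    """
    transitions: dict[str, list[str]] = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return dict(transitions)

def generate_melody(transitions: dict[str, list[str]], start: str,
                    length: int, rng: random.Random) -> list[str]:
    """Sample a new melody note by note; stop early if a note has no known successor."""
    melody = [start]
    while len(melody) < length:
        successors = transitions.get(melody[-1])
        if not successors:
            break
        melody.append(rng.choice(successors))
    return melody

# Hypothetical training data: three short melodies written as note names.
corpus = [["C", "D", "E", "C"], ["C", "E", "G", "E", "C"], ["E", "D", "C", "D", "E"]]
model = train_markov(corpus)
print(generate_melody(model, "C", 8, random.Random(42)))
```

A neural model plays the same role as the transition table here, but it conditions on far longer context and on the user’s prompt, rather than on the single previous note.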

While LLMs like GPT generate text, these AI systems produce original songs and lyrics. Composing music is inherently more intricate than generating text due to numerous variables such as instrument tone, tempo, rhythm, sound design choices, volume balancing, compression, and EQ adjustments.
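
The sketch below makes a few of these variables concrete. It converts tempo, note length, and level changes into the numbers an audio generator ultimately has to get right. The 44.1 kHz stereo output format and the three-minute song length are assumptions chosen for illustration.

```python
SAMPLE_RATE = 44_100  # CD-quality sample rate in Hz (an assumed output format)

def beat_seconds(bpm: float) -> float:
    """Duration of one beat (a quarter note in 4/4) at the given tempo."""
    return 60.0 / bpm

def note_samples(bpm: float, beats: float, sample_rate: int = SAMPLE_RATE) -> int:
    """Number of audio samples covered by a note lasting `beats` beats."""
    return round(beats * beat_seconds(bpm) * sample_rate)

def db_to_gain(db: float) -> float:
    """Linear amplitude factor for a level change in decibels, as used in volume balancing."""
    return 10 ** (db / 20)

def song_samples(seconds: float, channels: int = 2, sample_rate: int = SAMPLE_RATE) -> int:
    """Total number of sample values in an uncompressed recording."""
    return round(seconds * sample_rate * channels)

print(beat_seconds(120))         # 0.5
print(note_samples(120, 1))      # 22050
print(round(db_to_gain(-6), 3))  # 0.501
print(song_samples(180))         # 15876000
```

Even this simplified view shows the scale involved: at this rate, a three-minute stereo track holds almost 16 million sample values, and all of them must fit together for the result to sound clean.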

To produce coherent, pleasing compositions, these systems must interpret user preferences, genres, and specific sounds and combine them harmoniously. Audio synthesis, the stage that produces the actual sound, is often cited as a crucial aspect of generative music and can employ techniques such as audio diffusion.

Simply put, an audio diffusion model starts from random noise and removes it step by step, guided by the prompt, until a clean audio signal remains. The process mirrors image diffusion, where random noise is gradually refined into an image that matches a prompt.
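
The loop below is a minimal sketch of that idea on a short 1-D signal. It assumes a DDPM-style linear noise schedule and a deterministic, DDIM-style reverse update. A real system would use a trained neural network, conditioned on the text prompt, to predict the noise at each step. Here a hypothetical `oracle_noise_predictor` that already knows the target signal stands in for that network. The code therefore shows only the mechanics of adding noise and removing it step by step, not how a model learns to do so.

```python
import math
import random

def make_schedule(steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Linear noise schedule; alpha_bar[t] is the fraction of signal power kept at step t."""
    alpha_bars, keep = [], 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / max(steps - 1, 1)
        keep *= 1.0 - beta
        alpha_bars.append(keep)
    return alpha_bars

def add_noise(x0: list[float], alpha_bar: float, rng: random.Random) -> list[float]:
    """Forward process: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    return [math.sqrt(alpha_bar) * s + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
            for s in x0]

def oracle_noise_predictor(xt: list[float], alpha_bar: float, target: list[float]) -> list[float]:
    """HYPOTHETICAL stand-in for a trained, prompt-conditioned network.
    It recovers the noise exactly only because it is handed the target signal."""
    return [(x - math.sqrt(alpha_bar) * s) / math.sqrt(1.0 - alpha_bar)
            for x, s in zip(xt, target)]

def denoise(xt: list[float], alpha_bars: list[float], target: list[float]) -> list[float]:
    """Reverse process: walk from the noisiest step back to a clean signal."""
    x = xt
    for t in reversed(range(len(alpha_bars))):
        a_t = alpha_bars[t]
        eps_hat = oracle_noise_predictor(x, a_t, target)
        # Estimate of the clean signal implied by the predicted noise.
        x0_hat = [(xi - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t) for xi, e in zip(x, eps_hat)]
        # Re-noise that estimate to the smaller noise level of the previous step.
        a_prev = alpha_bars[t - 1] if t > 0 else 1.0
        x = [math.sqrt(a_prev) * c + math.sqrt(1.0 - a_prev) * e for c, e in zip(x0_hat, eps_hat)]
    return x

sample_rate = 8_000
target = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(64)]  # short A4 sine
schedule = make_schedule(steps=1000)
noisy = add_noise(target, schedule[-1], random.Random(0))  # almost pure noise
restored = denoise(noisy, schedule, target)
print(max(abs(a - b) for a, b in zip(restored, target)))  # close to 0
```

In a trained model the noise prediction is imperfect and depends on the prompt. The sampler therefore lands on a new signal that fits the description, rather than on a copy of a known one.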

Deep learning, a subfield of machine learning, plays a pivotal role in the development of AI-powered music systems. Deep learning models use artificial neural networks with many layers (hence “deep”) to learn intricate patterns from vast datasets, and their output generally becomes more accurate and nuanced as they are exposed to more data.

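In music, deep learning architectures such as WaveNet and the Transformer are instrumental in creating high-fidelity audio. WaveNet generates raw audio waveforms one sample at a time, predicting each new sample from the ones before it. Transformer-based models such as OpenAI’s Jukebox and Google’s MusicLM instead predict sequences of compressed audio tokens that are then decoded into sound. Both approaches can produce remarkably realistic and detailed music.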
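
The sketch below illustrates three ideas from the original WaveNet design in plain Python:

- 8-bit μ-law companding, which turns each audio sample into one of 256 classes that the network predicts.
- The receptive field of a stack of dilated causal convolutions with kernel size 2 and dilations 1, 2, 4, ..., 512.
- The autoregressive loop, in which each new sample is predicted from the previous ones.

The `predict_next` function is a hypothetical stand-in for the trained network. It is a two-tap linear recurrence that happens to continue a pure sine wave exactly.

```python
import math

MU = 255  # 8-bit mu-law: 256 output classes

def mu_law_encode(x: float, mu: int = MU) -> int:
    """Compress a sample in [-1, 1] logarithmically and quantize it to a class in [0, mu]."""
    x = max(-1.0, min(1.0, x))
    y = math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)
    return int(round((y + 1.0) / 2.0 * mu))

def mu_law_decode(q: int, mu: int = MU) -> float:
    """Map a class index back to an approximate sample value in [-1, 1]."""
    y = 2.0 * q / mu - 1.0
    return math.copysign(((1.0 + mu) ** abs(y) - 1.0) / mu, y)

def receptive_field(kernel_size: int, dilations: list[int]) -> int:
    """How many past samples a stack of dilated causal convolutions can see."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

def generate_samples(predict_next, seed: list[float], n: int, context: int) -> list[float]:
    """Autoregressive generation: append one predicted sample at a time."""
    samples = list(seed)
    for _ in range(n):
        samples.append(predict_next(samples[-context:]))
    return samples

# HYPOTHETICAL stand-in for a trained model: the recurrence
# x[n] = 2*cos(w)*x[n-1] - x[n-2] continues a sine wave exactly.
w = 2 * math.pi * 440 / 16_000  # 440 Hz at a 16 kHz sample rate

def predict_next(context: list[float]) -> float:
    return 2 * math.cos(w) * context[-1] - context[-2]

audio = generate_samples(predict_next, [0.0, math.sin(w)], n=200, context=2)
codes = [mu_law_encode(s) for s in audio]  # the classes a WaveNet-style model would output
print(receptive_field(2, [2 ** i for i in range(10)]))  # 1024
```

Doubling the dilation at each layer lets the receptive field grow exponentially with depth rather than linearly. This is why WaveNet-style models can take long stretches of audio into account when predicting each sample.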

One of the most compelling aspects of deep learning in music is its ability not only to imitate existing styles but also to create entirely new ones. These models can be conditioned on specific meta-features, such as emotional tone or genre characteristics, allowing them to generate diverse and evocative musical pieces.

How AI Will Transform the Music Industry in General

The deluge of AI-generated music also creates new challenges for working musicians. Those who create stock music or royalty-free sounds face uncertain prospects, while others grapple with AI’s impact on their craft.

Musician, producer, and YouTube educator Rick Beato highlights the enduring appeal of real instruments, arguing that AI-created music can’t replace the joy of playing music.

“People have too much fun playing real instruments, I’m not going to stop playing just because there’s AI guitar things,” Rick Beato said.

Still, there is recognition that AI could enhance certain aspects of music production, such as mixing and mastering.

The parallels to the rise of drum machines in the 1980s are evident, with musicians adapting to new technologies while preserving the essence of their craft.

Artificial intelligence is poised to revolutionize not only the composition of music but also how it is distributed and recommended to listeners. Music streaming platforms are already leveraging AI to suggest songs to users based on their listening habits, ushering in a new era of personalized music discovery.

Recommendation algorithms are expected to become even more personalized. By analyzing vast amounts of user data, they can tailor suggestions to individual preferences, introducing listeners to new music that aligns closely with their tastes and interests.
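
Streaming services do not publish the full details of their recommendation systems. The sketch below therefore shows one classic technique, user-based collaborative filtering, rather than any platform’s actual method. It assumes a hypothetical table of play counts per user. It measures how similar two listeners are with cosine similarity, then scores each track the user has not heard by how much similar listeners played it.

```python
import math
from collections import defaultdict

Plays = dict[str, dict[str, float]]  # user -> {track: play count}

def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse play-count vectors."""
    dot = sum(count * b.get(track, 0.0) for track, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend(user: str, plays: Plays, k: int = 3) -> list[str]:
    """Rank tracks the user has not played by similarity-weighted plays of other users."""
    mine = plays[user]
    scores: dict[str, float] = defaultdict(float)
    for other, theirs in plays.items():
        if other == user:
            continue
        similarity = cosine(mine, theirs)
        if similarity <= 0:
            continue  # ignore listeners with no overlap in taste
        for track, count in theirs.items():
            if track not in mine:
                scores[track] += similarity * count
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda t: (-scores[t], t))[:k]

# Hypothetical listening data.
plays: Plays = {
    "ana": {"lofi_beats": 12, "jazz_standards": 4},
    "ben": {"lofi_beats": 9, "jazz_standards": 6, "bossa_nova": 7},
    "chloe": {"lofi_beats": 3, "synthwave": 10},
    "dev": {"death_metal": 15},
}
print(recommend("ana", plays))  # ['bossa_nova', 'synthwave']
```

Production systems typically combine many more signals than play counts. The same principle still applies: listeners who behave alike are used to predict what each of them will enjoy next.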

AI is also expected to streamline the delivery of music to different platforms and audiences, helping musicians reach listeners more efficiently.

The integration of AI into music education and training represents a significant advancement with far-reaching implications. By leveraging AI technology, music education can be personalized and optimized to meet the needs of individual learners, thereby democratizing access to musical learning experiences.

A key strength of AI-enhanced music education is its ability to provide real-time feedback and assessment. Traditional music education often relies on subjective evaluations from teachers, which can be limited by factors such as time constraints and individual biases.

AI-powered tools, by contrast, can evaluate a student’s performance consistently and instantly, giving feedback on aspects such as pitch accuracy, rhythm, and technique. This immediate feedback helps students identify areas for improvement and track their progress over time, making the learning process more efficient and effective.
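
The arithmetic behind this kind of feedback can be sketched as follows. The sketch assumes that a separate pitch tracker and onset detector, hypothetical here and not shown, have already measured each note’s frequency and the time at which it was played. It reports how far a note is from the nearest equal-tempered pitch, in cents (hundredths of a semitone, with A4 = 440 Hz). It also reports how early or late each onset lands relative to a metronome grid. The function names are illustrative.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def pitch_feedback(freq_hz: float, a4_hz: float = 440.0) -> tuple[str, float]:
    """Return the nearest equal-tempered note and the deviation from it in cents."""
    midi = 69 + 12 * math.log2(freq_hz / a4_hz)  # MIDI note number, fractional
    nearest = round(midi)
    cents = 100 * (midi - nearest)  # positive = sharp, negative = flat
    name = NOTE_NAMES[nearest % 12] + str(nearest // 12 - 1)
    return name, cents

def rhythm_feedback(onsets_s: list[float], bpm: float, subdivision: int = 1) -> list[float]:
    """Offset in milliseconds of each onset from the nearest grid line; positive = late."""
    step = 60.0 / bpm / subdivision
    return [1000 * (t - round(t / step) * step) for t in onsets_s]

# Hypothetical measurements from a student's performance.
name, cents = pitch_feedback(445.0)
print(f"{name}: {cents:+.1f} cents")  # A4: +19.6 cents
offsets = rhythm_feedback([0.0, 0.52, 0.98, 1.5], bpm=120)
print([f"{o:+.0f} ms" for o in offsets])  # ['+0 ms', '+20 ms', '-20 ms', '+0 ms']
```

A teaching tool could then turn these numbers into concrete advice, such as flagging notes more than a chosen number of cents off pitch or beats that consistently rush. The thresholds would be a design choice.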

Moreover, AI can offer personalized learning experiences tailored to the unique needs and preferences of each student. By analyzing data on a student’s learning style, skill level, and musical interests, AI algorithms can generate customized lesson plans and exercises designed to maximize learning outcomes.

Perhaps most importantly, the use of AI in music education has the potential to break down barriers to access and participation. Traditional music education often requires access to specialized instructors, instruments, and resources, making it inaccessible to many individuals due to factors such as geography, time constraints, and financial resources.