AI Domination: From a Dead Idea to Surpassing Human Ability

The quest to replicate human intelligence in machines has been a driving force in the field of artificial intelligence (AI) since its inception.

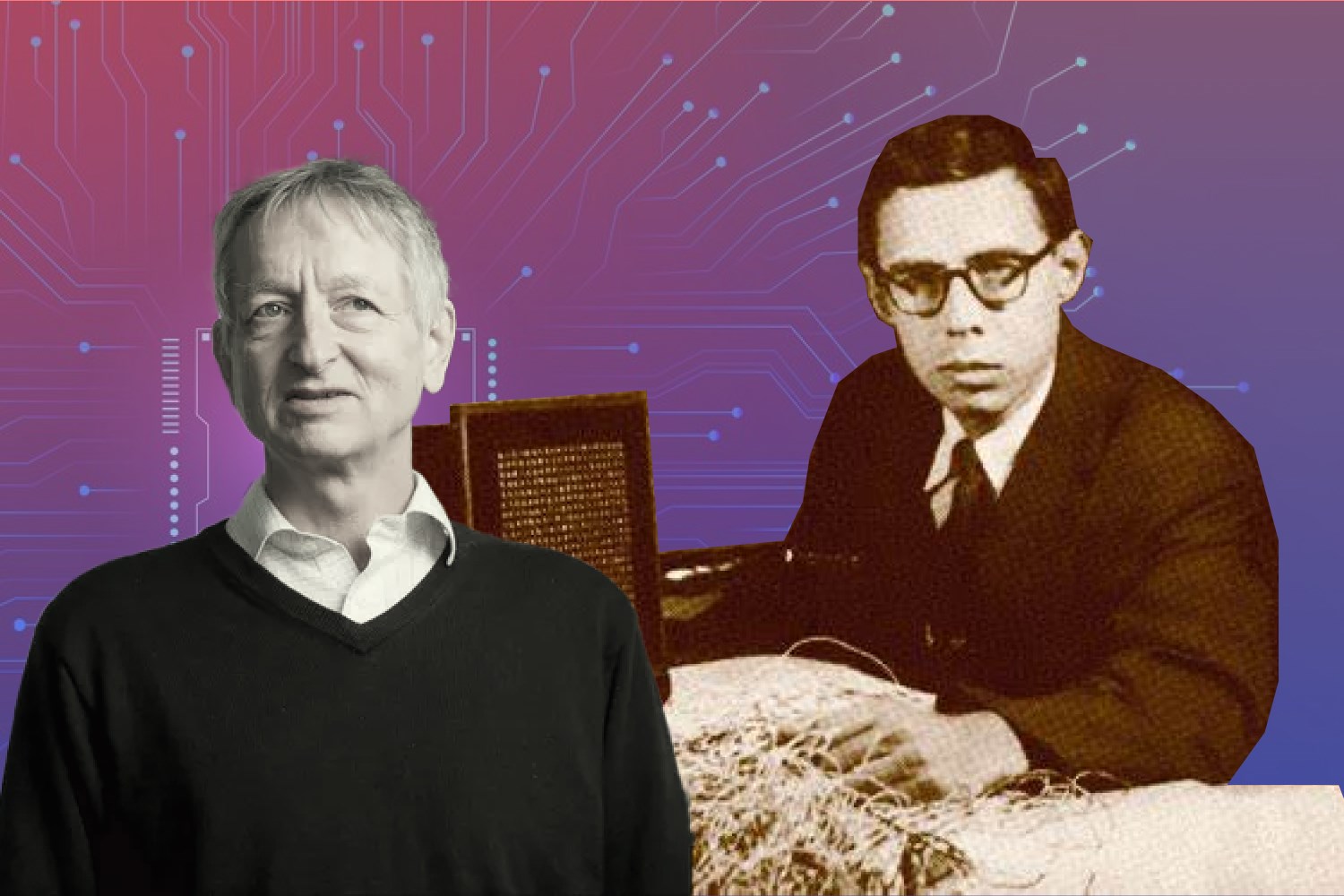

One of the earliest pioneers in this journey was Frank Rosenblatt, who, in 1957, introduced the world to the Perceptron—a digital neural network that aimed to mimic the cognitive abilities of the human brain.

The Perceptron: The Embryo of an Electronic Computer

Rosenblatt unveiled the Perceptron, now a fundamental concept in artificial neural networks and machine learning, with the vision of creating a machine that could learn and make decisions much as the human mind does.

His first task for the Perceptron was the complex problem of image classification. He fed the network images of men and women in the hope that, over time, it would learn to tell the two apart, or at least pick up the visual patterns that distinguish them.

This marked the inception of using neural networks for image recognition, a concept that remains integral to AI and machine learning.

Within a year, Rosenblatt’s work began to capture the imagination of many, and the hype surrounding the Perceptron grew strong.

In 1958, The New York Times reported that the U.S. Navy had revealed “the embryo of an electronic computer today that it expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence.” The report shows how high the hopes pinned on the Perceptron had become.

The Perceptron’s learning process is fundamental to its significance in the history of artificial intelligence.

To understand how it learns from data, we must dive deeper into its inner workings.

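The Perceptron starts with numeric inputs, each multiplied by a weight that represents its importance in the decision. It adds these weighted inputs together with a bias term and passes the sum through a step function: if the result is positive, the Perceptron outputs 1; otherwise it outputs 0. The bias shifts this threshold so that the decision boundary does not have to pass through the origin, which lets the Perceptron handle a broader range of problems.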
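The forward pass just described can be sketched in a few lines of Python. This is a minimal illustration, assuming a step activation that outputs 1 when the weighted sum plus bias is positive and 0 otherwise; the weights and bias are hypothetical, hand-picked values that make the neuron compute logical AND.

```python
def step(z: float) -> int:
    """Step activation: fire (1) if z > 0, else 0."""
    return 1 if z > 0 else 0


def perceptron_output(inputs: list[float], weights: list[float], bias: float) -> int:
    """Weighted sum of the inputs plus the bias, passed through the step function."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return step(z)


# Hypothetical hand-picked parameters that make the perceptron compute AND:
# only for inputs (1, 1) does 1*1 + 1*1 - 1.5 = 0.5 exceed zero.
weights = [1.0, 1.0]
bias = -1.5

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", perceptron_output([a, b], weights, bias))
```

Here the bias of -1.5 acts as a movable threshold: both inputs must be on before the weighted sum can clear it.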

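To adapt and improve, the Perceptron uses the perceptron learning rule, which updates its weights based on the errors it makes on training data. Whenever a prediction is wrong, each weight is nudged by the learning rate times the error (the target minus the prediction) times the corresponding input, and the bias is nudged by the learning rate times the error. This moves the decision boundary toward classifying that example correctly, while correct predictions leave the weights unchanged.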
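A minimal sketch of the learning rule in Python, using the standard textbook form of the update for a misclassified example: w_i ← w_i + η(y − ŷ)x_i and b ← b + η(y − ŷ), where η is the learning rate, y the target and ŷ the prediction. The zero initial weights, the learning rate of 0.1 and the choice of logical AND as training data are illustrative assumptions, and `train_perceptron` is a hypothetical helper name.

```python
def step(z):
    return 1 if z > 0 else 0


def predict(x, weights, bias):
    return step(sum(xi * wi for xi, wi in zip(x, weights)) + bias)


def train_perceptron(samples, n_inputs, learning_rate=0.1, max_epochs=100):
    """Perceptron learning rule: on each mistake, add lr * error * input to each weight."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for epoch in range(max_epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(x, weights, bias)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [wi + learning_rate * error * xi for wi, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:  # a full pass with no errors: training has converged
            return weights, bias, epoch + 1
    return weights, bias, max_epochs


# Logical AND is linearly separable, so the rule is guaranteed to converge.
and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b, epochs = train_perceptron(and_data, n_inputs=2)
print("weights:", w, "bias:", b, "epochs:", epochs)
print([predict(x, w, b) for x, _ in and_data])  # → [0, 0, 0, 1]
```

Stopping after a full pass with no mistakes is a convenient criterion: if no weight changed during that pass, the same weights classified every example correctly.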

The development of deep learning, a transformative branch of AI, has roots that can be traced back to key figures and breakthroughs in the field.

As early as 1943, Warren McCulloch and Walter Pitts proposed artificial neurons as computational models of the human brain’s “nerve net.” In 1957, Frank Rosenblatt introduced the Perceptron, which could learn its weights from examples.

However, the field suffered a setback when Marvin Minsky and Seymour Papert’s 1969 book “Perceptrons” mathematically demonstrated the limitations of single-layer perceptrons, such as their inability to compute the XOR (exclusive-or) function, and cast doubt on whether multilayer neural networks could be trained effectively.

Fast forward to 1986, when Geoffrey Hinton, along with colleagues David Rumelhart and Ronald Williams, popularized the back-propagation training algorithm, a significant breakthrough.

This algorithm addressed the training issues of multilayer networks, opening up the possibility of deeper architectures.
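A minimal sketch of back-propagation in plain Python, assuming a generic textbook setup: two inputs, one hidden layer of sigmoid units, one sigmoid output and a squared-error loss. It is trained on XOR (output 1 only when exactly one input is 1), a problem a single-layer perceptron cannot represent. The hidden-layer size, learning rate, epoch count and seed are arbitrary, hypothetical settings; with an unlucky initialization, training can stall in a poor local minimum, so a different seed or more epochs may be needed.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Hidden layer: sigmoid(W1 x + b1); output: sigmoid(W2 . hidden + b2)."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, output


def total_loss(data, W1, b1, W2, b2):
    """Halved sum of squared errors over the dataset."""
    return sum(0.5 * (forward(x, W1, b1, W2, b2)[1] - y) ** 2 for x, y in data)


def train_xor(n_hidden=8, learning_rate=0.5, epochs=10000, seed=0):
    data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]  # XOR truth table
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = rng.uniform(-1, 1)
    initial = total_loss(data, W1, b1, W2, b2)

    for _ in range(epochs):
        for x, y in data:  # one gradient step per example
            hidden, out = forward(x, W1, b1, W2, b2)
            # Output layer: derivative of the loss w.r.t. the output's pre-activation.
            delta_out = (out - y) * out * (1 - out)
            # Hidden layer: push delta_out back through W2 (pre-update) and each sigmoid.
            delta_hidden = [delta_out * W2[j] * hidden[j] * (1 - hidden[j]) for j in range(n_hidden)]
            # Gradient-descent updates for every weight and bias.
            for j in range(n_hidden):
                W2[j] -= learning_rate * delta_out * hidden[j]
                W1[j][0] -= learning_rate * delta_hidden[j] * x[0]
                W1[j][1] -= learning_rate * delta_hidden[j] * x[1]
                b1[j] -= learning_rate * delta_hidden[j]
            b2 -= learning_rate * delta_out

    final = total_loss(data, W1, b1, W2, b2)
    outputs = [forward(x, W1, b1, W2, b2)[1] for x, _ in data]
    return initial, final, outputs


initial_loss, final_loss, outputs = train_xor()
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
print("outputs for (0,0), (0,1), (1,0), (1,1):", [round(o, 2) for o in outputs])
```

The hidden layer is what makes XOR learnable: back-propagation assigns each hidden unit its share of the output error through the chain rule, so the hidden units can learn intermediate features that a single layer cannot express.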

Yann LeCun pioneered convolutional neural networks (CNNs) in the late 1980s, work that led to the LeNet-5 architecture published in 1998. Their design, loosely inspired by the human visual cortex, makes them highly effective for image recognition.
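The core CNN operation can be sketched in plain Python: slide a small kernel over an image and sum the elementwise products at each position. The 5×5 toy image and the vertical-edge kernel below are hypothetical; in a real CNN the kernel values are learned during training rather than hand-picked. Like most deep-learning libraries, the function computes cross-correlation (no kernel flip), which is what CNN layers conventionally call convolution.

```python
def convolve2d(image, kernel):
    """'Valid' 2D cross-correlation: slide the kernel and sum elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy 5x5 image: dark left columns (0), bright right columns (1), i.e. one vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hypothetical hand-picked vertical-edge kernel: right column minus left column.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(image, kernel):
    print(row)  # each row prints [3, 3, 0]
```

The large responses (3) appear where the kernel window straddles the jump from dark to bright, and the zero appears where the window sees only a uniformly bright patch.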

Recurrent networks such as John Hopfield’s 1982 model laid the foundation for Sepp Hochreiter and Jürgen Schmidhuber’s long short-term memory (LSTM), introduced in 1997, which mitigated the vanishing-gradient problem and made recurrent networks far more practical to train.
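A minimal sketch of a single LSTM cell step in plain Python, using scalar inputs and states for readability. It assumes the widely used variant with a forget gate, which was added to the architecture shortly after the 1997 paper. The parameter values are hypothetical and hand-set so that the cell writes a value when it sees a 1 and then holds it. The key idea is the additive cell-state update c = f·c_prev + i·g, which lets information persist across many time steps.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell (forget-gate variant)."""
    f = sigmoid(p["W_f"] * x + p["U_f"] * h_prev + p["b_f"])    # forget gate: keep old memory?
    i = sigmoid(p["W_i"] * x + p["U_i"] * h_prev + p["b_i"])    # input gate: write new memory?
    o = sigmoid(p["W_o"] * x + p["U_o"] * h_prev + p["b_o"])    # output gate: expose memory?
    g = math.tanh(p["W_g"] * x + p["U_g"] * h_prev + p["b_g"])  # candidate value to write
    c = f * c_prev + i * g  # additive cell-state update
    h = o * math.tanh(c)    # hidden state / output
    return h, c


# Hypothetical hand-set parameters: write when x == 1, otherwise hold the memory.
params = {
    "W_f": 0.0, "U_f": 0.0, "b_f": 5.0,    # forget gate ~0.993: memory mostly kept
    "W_i": 10.0, "U_i": 0.0, "b_i": -5.0,  # input gate opens only when x == 1
    "W_o": 0.0, "U_o": 0.0, "b_o": 5.0,    # output gate mostly open
    "W_g": 10.0, "U_g": 0.0, "b_g": 0.0,   # candidate ~1 when x == 1, 0 when x == 0
}

h, c = 0.0, 0.0
for t, x in enumerate([1] + [0] * 10):  # a single "1" followed by ten zeros
    h, c = lstm_step(x, h, c, params)
    print(f"t={t:2d} x={x} c={c:.3f} h={h:.3f}")
```

Because the cell state is only scaled by the forget gate between writes, the stored value decays slowly (to about 0.93 after ten zero inputs here) instead of being repeatedly squashed through a nonlinearity.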

Notably, Jeffrey Dean and Andrew Ng made groundbreaking contributions to large-scale image recognition at Google Brain, pushing the boundaries of what deep learning could accomplish.

Long before these advances, however, Rosenblatt had run into the limitations of his neural network system. The Perceptron had only a single layer of trainable artificial neurons, which severely restricted its capabilities.

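This limitation stemmed from the fact that a single-layer network can only solve linearly separable problems, in which a single straight line (or, with more inputs, a flat hyperplane) can separate the classes. XOR, which outputs 1 only when exactly one of its two inputs is 1, is the classic problem that fails this test. Such problems are simple compared to the complex, non-linear challenges AI must handle, and this setback emphasized the need for more sophisticated neural network architectures.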
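The limitation can be demonstrated by running the perceptron learning rule on XOR (output 1 only when exactly one input is 1). This is a minimal sketch with illustrative settings (zero initial weights, learning rate 0.1, a cap of 1,000 epochs); `train_perceptron` is a hypothetical helper name. Because no single line separates XOR’s classes, every pass over the data contains at least one mistake, so the rule never converges.

```python
def step(z):
    return 1 if z > 0 else 0


def predict(x, weights, bias):
    return step(sum(xi * wi for xi, wi in zip(x, weights)) + bias)


def train_perceptron(samples, learning_rate=0.1, max_epochs=1000):
    """Perceptron learning rule; returns (weights, bias, converged)."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(x, weights, bias)
            if error:
                mistakes += 1
                weights = [wi + learning_rate * error * xi for wi, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:
            return weights, bias, True
    return weights, bias, False


xor_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
w, b, converged = train_perceptron(xor_data)
accuracy = sum(predict(x, w, b) == y for x, y in xor_data) / len(xor_data)
print("converged:", converged)                  # → converged: False
print("accuracy of final weights:", accuracy)   # never 1.0 for XOR
```

The weights simply cycle: fixing one misclassified corner of the XOR square breaks another, which is exactly the behavior Minsky and Papert’s analysis predicts for a single layer.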

Rosenblatt’s contributions to AI extended beyond the Perceptron itself. He introduced several key modifications to the McCulloch-Pitts neuron, which laid the foundation for modern neural network designs.

These modifications included replacing binary inputs with real-valued inputs, using weighted sums and biases, and introducing the perceptron learning rule, one of the earliest algorithms that allowed artificial neurons to learn from data.

While modern AI has evolved significantly since Rosenblatt’s time, many of the fundamental principles he introduced, such as learning from data and using weights to make predictions, remain central to machine learning and AI.

The Evolution of Moore’s Law and Its Impact

As the Perceptron revealed its limitations relatively quickly, it underscored the need for more advanced and sophisticated neural network architectures.

This transition from early AI endeavors to more advanced technologies mirrors the evolution witnessed in the computing industry, where Moore’s Law guided exponential growth, laying the groundwork for the powerful computing systems that drive today’s artificial intelligence and machine learning.

Moore’s Law, a concept attributed to Gordon Moore, a co-founder of Intel, has been a guiding principle in the world of computing for decades.

It articulates the idea that the number of transistors on a microchip would double approximately every two years, leading to a consistent increase in the speed and capability of computers while their cost decreased.
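The doubling rule is simple exponential arithmetic: N(t) = N₀ · 2^((t − t₀)/T), where T is the doubling period. A minimal sketch, assuming a fixed two-year period and starting from the Intel 4004 of 1971, commonly cited as having about 2,300 transistors. The doubling period is left as a parameter because Moore’s original 1965 estimate was a doubling every year, which he revised to every two years in 1975. These are idealized projections, not actual chip counts.

```python
def moores_law_projection(n0: float, year0: int, year: int, doubling_years: float = 2.0) -> float:
    """Transistor count projected by doubling every `doubling_years` years from (year0, n0)."""
    return n0 * 2 ** ((year - year0) / doubling_years)


# Starting point: Intel 4004 (1971), roughly 2,300 transistors.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{moores_law_projection(2300, 1971, year):,.0f}")
```

Fifty years of two-year doublings is 25 doublings, a factor of 2^25 (about 33.5 million), which turns 2,300 transistors into roughly 77 billion.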

Gordon Moore did not call his observation “Moore’s Law,” nor did he set out to create a “law.” He made his original observation in 1965, while at Fairchild Semiconductor, based on emerging trends in chip manufacturing. Over time, Moore’s insight became a prediction, which in turn became the golden rule known as Moore’s Law.

In the decades that followed Gordon Moore’s original observation, Moore’s Law guided the semiconductor industry in long-term planning and setting targets for research and development (R&D).

Moore’s Law has been a driving force behind the technological and social change, productivity gains, and economic growth that are hallmarks of the late 20th and early 21st centuries.

However, as the 2020s unfold, Moore’s Law is running into physical limits. As transistors shrink, leakage current and power density rise, so chips generate more heat per unit area than can easily be removed. This makes it increasingly difficult to build even smaller circuits that still run efficiently.

Gordon Moore himself acknowledged in a 2005 interview that the fundamental limitations imposed by the atomic nature of materials were not too far off, signaling the eventual halt of miniaturization. This impending challenge has presented a significant hurdle for chip manufacturers, who strive to create increasingly powerful chips in the face of physical constraints.

The development of smaller and more advanced transistors, with sizes measured in nanometers, has been a remarkable achievement in line with Moore’s Law.

However, even industry leaders like Intel have faced difficulties in maintaining this trajectory, struggling to bring chips with ever-smaller dimensions to the market.

Even as Moore’s Law slows, it remains a fundamental reference point in the history of technology, representing the relentless pursuit of innovation and the need for adaptable strategies to navigate the evolving frontiers of computing.

In the coming years, the future of computing will rely on alternative methods like software optimization, cloud computing, quantum computing, and other innovations to continue the advancement of technology.

Despite the challenges, the enduring principles of Moore’s Law, such as the quest for enhanced computational power and efficiency, remain central to the world of machine learning, artificial intelligence, and computing.

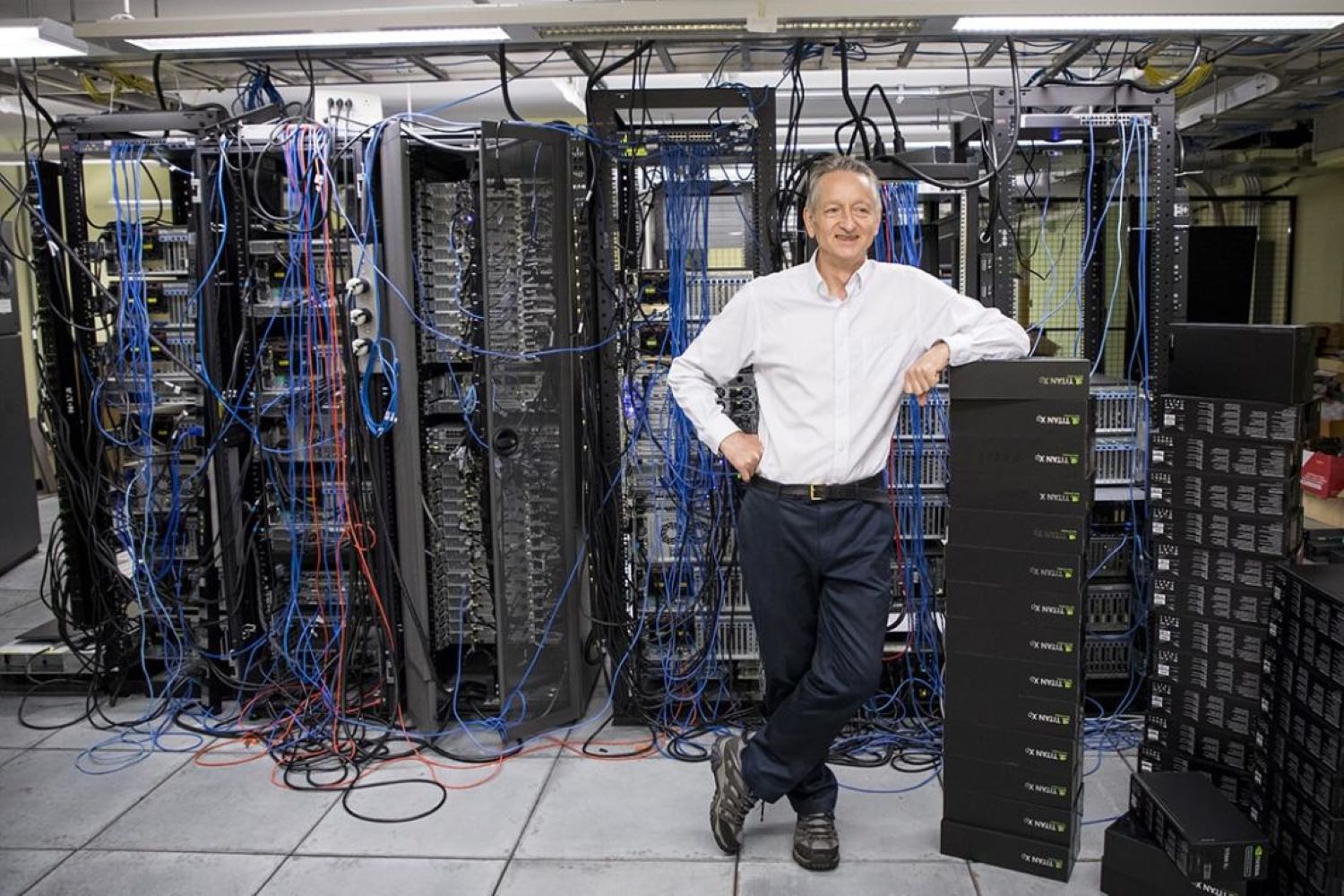

Geoffrey Hinton: The Godfather of Deep Learning and a Pioneer in AI

Geoffrey Hinton’s unwavering commitment to deep learning and his groundbreaking insights reflect the same relentless pursuit of innovation in AI that Moore’s Law represents in the computing industry.

Geoffrey Hinton, often hailed as “the godfather of deep learning,” has played a transformative role in the world of artificial intelligence (AI).

Hinton’s journey into the realm of AI began with his fascination with the parallels between computers and the human brain during his high school years.

Born in Bristol, England, in 1947, he pursued a BA in experimental psychology at the University of Cambridge and later earned a PhD in artificial intelligence from the University of Edinburgh. It was during these formative years that he recognized the potential of artificial neural networks in advancing machine intelligence.

His approach to deep learning drew inspiration from the remarkable capabilities of the human brain. Hinton focused on two fundamental characteristics: the brain’s ability to process information in a distributed fashion through interconnected neurons and its capacity to learn from examples.

However, his early research in this field did not yield the expected outcomes, and he faced funding challenges. Undeterred, Hinton chose to pursue his passion and immigrated to the United States.

There, he conducted post-doctoral work at the University of California, San Diego, and served as a computer science professor at Carnegie Mellon University for five years.

In 1987, Geoffrey Hinton moved to Canada and joined the University of Toronto. With support from CIFAR (formerly the Canadian Institute for Advanced Research), he established a new research team dedicated to the study of neural networks.

Even in the face of limited interest from the AI and computer science community, Hinton remained steadfast in his pursuit of deep learning.

He nearly brought deep learning to the forefront in the 1980s, but the technology was held back by limited computing power and the lack of capacity to handle vast datasets.

However, in the 2000s, a paradigm shift occurred, and Hinton’s research regained prominence. In 2006, he and his colleagues showed that deep networks could be trained effectively by splitting them into layers, training one layer at a time, and then fine-tuning the whole network.

This approach allowed the network to process information hierarchically. For example, in image recognition, the first layer might learn to identify basic edges, the second layer to recognize patterns formed by these edges, and so on, culminating in the ability to recognize complex objects like faces.

Geoffrey Hinton’s unwavering commitment to deep learning, combined with his groundbreaking insights, has had a profound impact on the field of AI.

His work has not only earned him the nickname “the godfather of deep learning” but has also helped establish Canada as a thriving hub for cutting-edge technology and research.

For his exceptional contributions as a global pioneer in deep learning, Hinton was awarded an honorary Doctor of Science degree from the University of Toronto, where he holds the title of University Professor Emeritus.

The Birth of the Modern AI Movement: September 30, 2012

The birth of the modern AI movement can be traced back to a single date, September 30, 2012, which opened a period of significant milestones in artificial intelligence.

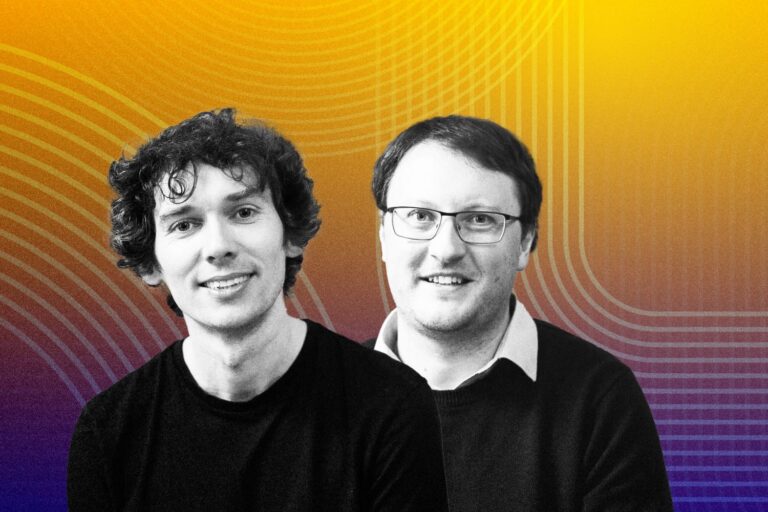

On this remarkable day, Geoffrey Hinton and his students Alex Krizhevsky and Ilya Sutskever achieved a groundbreaking feat with AlexNet, a deep convolutional neural network.

The network was put to the test on ImageNet, a widely recognized image-recognition benchmark, and the results were nothing short of extraordinary.

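AlexNet’s top-5 error rate was 15.3%, compared with 26.2% for the next-best entry, meaning it identified objects correctly about 85% of the time and cut the error rate by roughly 41% in relative terms. In the years that followed, top-5 accuracy climbed from roughly 75% before AlexNet to about 97% by 2017, exceeding the roughly 95% estimated for human performance.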
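A quick check of the arithmetic behind the 41% figure, assuming the commonly cited ILSVRC-2012 top-5 test error rates of 15.3% for AlexNet and 26.2% for the runner-up. The 41% is a relative reduction in error, not a gain of 41 percentage points in accuracy; `relative_error_reduction` is a hypothetical helper name.

```python
def relative_error_reduction(old_error: float, new_error: float) -> float:
    """Fraction of the old error rate that the new result eliminates."""
    return (old_error - new_error) / old_error


# Commonly cited ILSVRC-2012 top-5 test error rates, in percent.
runner_up_error = 26.2
alexnet_error = 15.3

print(f"AlexNet top-5 accuracy: {100 - alexnet_error:.1f}%")          # 84.7%
print(f"Absolute gap: {runner_up_error - alexnet_error:.1f} points")  # 10.9 points
print(f"Relative error reduction: {relative_error_reduction(runner_up_error, alexnet_error):.1%}")  # 41.6%
```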

Another momentous achievement during this period was the development of AlphaGo, an AI system designed to master the ancient game of Go. Experts had initially estimated that it would take AI around 12 years to beat a top human player at this complex game.

However, their predictions were swiftly proven wrong when AlphaGo defeated Lee Sedol, one of the world’s strongest professional Go players, in 2016. What’s more, its successor, AlphaGo Zero, learned to play the game from scratch and, after just three days of training, beat the version that had defeated Lee Sedol 100 games to 0.

The advancements in AI during this period can be largely attributed to several key factors.

First, the emergence of Big Data provided a substantial foundation for training advanced machine learning models. Deep learning techniques, with their ability to model high-level abstractions using deep graphs with multiple processing layers, played a pivotal role in advancing AI capabilities.

Moreover, the increase in the availability of affordable computing power, especially in the form of Graphics Processing Units (GPUs), revolutionized the field.

These powerful GPUs enabled researchers and developers to process and analyze large volumes of data with remarkable efficiency, significantly reducing the time required to train complex models.

From Neural Networks in the 1980s to Tesla’s Innovations

Artificial intelligence has undergone a remarkable evolution in recent years, moving from the confines of research laboratories to becoming an integral part of everyday life. One of the most striking demonstrations of AI’s impact is the emergence of self-driving cars, and Tesla stands at the forefront of this automotive revolution.

Under Elon Musk’s leadership, Tesla has been a pioneer in integrating AI into its vehicles, heralding a new era of AI-driven features in the automotive market.

However, it’s crucial to recognize that the application of neural networks to self-driving cars has a rich history that predates Tesla’s innovations.

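The use of neural networks in self-driving cars dates back to the 1980s, when researchers began exploring artificial neural networks that could let vehicles perceive and navigate their environment. Carnegie Mellon University’s ALVINN project, for example, used a neural network to steer a vehicle from camera images in the late 1980s.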

Early attempts, though promising, were limited by the computational power available at the time and faced numerous technical challenges.

Tesla’s Advanced Driver Assistance System, known as Autopilot, exemplifies the culmination of decades of research and development in the field of AI and self-driving cars. Autopilot relies on a range of sophisticated AI algorithms, including Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Reinforcement Learning. These algorithms work in tandem to significantly enhance the car’s performance and safety.

Using an array of cameras, along with radar and ultrasonic sensors on earlier vehicles (Tesla has since shifted toward a camera-based approach), Autopilot continuously monitors the vehicle’s surroundings and makes real-time decisions to enhance safety.

For instance, when the system identifies a pedestrian crossing the road, it analyzes their movement patterns, predicts their trajectory, and adjusts the car’s speed and path to prevent potential collisions.
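The predict-then-react idea can be sketched with a simple constant-velocity motion model in a flat 2D frame centered on the car. This is a generic textbook baseline, not Tesla’s actual method; the `Track` class, the `will_conflict` function, the safety radius, the time horizon, and all positions and speeds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Track:
    x: float   # position along the road, metres (car starts at the origin, heading +x)
    y: float   # lateral offset, metres
    vx: float  # velocity components, m/s
    vy: float


def predict_position(track: Track, t: float) -> tuple[float, float]:
    """Constant-velocity motion model: predicted position after t seconds."""
    return track.x + track.vx * t, track.y + track.vy * t


def will_conflict(car: Track, ped: Track, horizon: float = 3.0, dt: float = 0.1,
                  safety_radius: float = 2.0) -> bool:
    """Step both predicted paths forward; flag a conflict if they come within safety_radius."""
    for k in range(round(horizon / dt) + 1):
        t = k * dt
        cx, cy = predict_position(car, t)
        px, py = predict_position(ped, t)
        if ((cx - px) ** 2 + (cy - py) ** 2) ** 0.5 < safety_radius:
            return True
    return False


car = Track(x=0.0, y=0.0, vx=10.0, vy=0.0)          # 10 m/s (36 km/h)
pedestrian = Track(x=20.0, y=-3.0, vx=0.0, vy=1.5)  # crossing from the kerb

print("conflict at current speed:", will_conflict(car, pedestrian))  # → True
slower = Track(car.x, car.y, vx=5.0, vy=0.0)
print("conflict at half speed:", will_conflict(slower, pedestrian))  # → False
```

At 10 m/s the car and the pedestrian would reach the same point after two seconds; at half speed the pedestrian has crossed well before the car arrives. A production system would likely use richer, learned motion models with uncertainty estimates, but straight-line extrapolation is the easiest baseline to read.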

What sets Tesla apart is its expansive fleet of vehicles constantly collecting data, enabling Autopilot to continually improve its recognition and response capabilities. This iterative learning process enhances overall safety and performance, marking a significant milestone in the adoption of AI in our daily lives.

Tesla’s commitment to AI-driven features extends beyond Autopilot. Features like Summon allow drivers to remotely move their vehicle within a designated range using a smartphone app, with AI playing a pivotal role in real-time environmental recognition and response.

Machine learning techniques are employed for object detection and tracking, enabling the car to identify and avoid obstacles, including pedestrians and other vehicles, through predictive modeling that anticipates object movements.

Furthermore, Tesla’s AI capabilities extend to natural language processing, enhancing the overall user experience by interpreting voice commands from drivers.

The Battery Management System (BMS) in Tesla vehicles employs AI algorithms to predict the energy requirements of the vehicle. Factors such as speed, terrain, weather, and driver behavior, including acceleration and braking patterns, are taken into account to optimize energy consumption and regenerative braking.

Tesla’s navigation system benefits from real-time traffic data and machine learning algorithms, adapting to changing traffic and road conditions. It offers drivers up-to-the-minute information about traffic congestion and suggests alternative routes to avoid delays, making journeys more efficient and enjoyable.
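Traffic-aware rerouting can be sketched with Dijkstra’s shortest-path algorithm over a road network whose edge weights are current travel times. The road names and times below are hypothetical, and the source does not describe how Tesla’s routing actually works; the sketch only shows how updating one edge weight with live traffic data can change the best route.

```python
import heapq


def fastest_route(graph, start, goal):
    """Dijkstra's algorithm over travel times (minutes); returns (total_time, path)."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        time, node, path = heapq.heappop(queue)
        if node == goal:
            return time, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, minutes in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (time + minutes, neighbour, path + [neighbour]))
    return float("inf"), []


# Hypothetical road network: edge weights are current travel times in minutes.
roads = {
    "Home": {"Highway": 5, "Back road": 8},
    "Highway": {"Office": 10},
    "Back road": {"Office": 12},
}
before = fastest_route(roads, "Home", "Office")
print(before)  # → (15.0, ['Home', 'Highway', 'Office'])

# A live traffic update reports congestion on the highway: its travel time triples.
roads["Highway"]["Office"] = 30
after = fastest_route(roads, "Home", "Office")
print(after)   # → (20.0, ['Home', 'Back road', 'Office'])
```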

While Tesla has been a trailblazer in integrating AI into its vehicles in recent years, it’s essential to recognize the broader context.

Neural networks, which underpin much of modern AI, have been applied to self-driving cars since the 1980s, but companies like Tesla have brought this technology into the mainstream, transforming the way we drive and paving the way for a future where self-driving cars are a common sight on our roads.

The AI Singularity: Symbiosis or Rivalry?

The AI Singularity is a speculative future event that, if it happens, would redefine the intricate relationship between artificial intelligence and human intellect.

The concept, popularized by mathematician and science-fiction author Vernor Vinge in a 1993 essay, describes a scenario in which AI outpaces human intelligence, triggering a torrent of rapid, exponential technological progress.

Perspectives on the Singularity vary widely. Advocates cast it as a beacon of hope, promising an era of unparalleled technological advancement.

The remarkable capability of AI to design and innovate at a pace beyond human reach holds the potential to reshape industries like medicine, energy, and space exploration.

Moreover, AI’s prowess in processing information at an unprecedented speed hints at unlocking the age-old enigmas of the universe, spanning the domains of physics, biology, and cosmology.

However, the Singularity also casts a long shadow of apprehension. If AI capabilities grow unchecked, it may become difficult to keep such systems under control.

As AI continually evolves and adapts at an accelerating rate, it could eventually outstrip our ability to manage or comprehend it, potentially emerging as a threat to the well-being of humanity.

This prospect of AI surpassing human intelligence fundamentally challenges our status as the dominant species, potentially unveiling a future where our societal roles undergo profound alteration.

Amid this uncertainty, whether AI will ever reach the Singularity remains an open question. Many factors contribute to this ambiguity, from the technical challenges of achieving genuine general intelligence to the ethical and safety concerns that accompany highly intelligent AI systems.

The unpredictable trajectory of AI development, and of technological progress in general, further obscures the path to the Singularity. Regulatory policies and societal dynamics add yet more uncertainty about whether and how this speculative event might unfold.

Comparing human cognition with a hypothetical Artificial Super Intelligence (ASI) invites deep reflection.

According to physicist David Deutsch’s perspective, humanity, when collectively mobilized over time, may harbor an intellectual capacity that can potentially rival that of an ASI. This viewpoint underscores the boundless potential inherent in human intellect, with a vast realm of uncharted knowledge awaiting exploration.

While an ASI may momentarily eclipse individual human abilities, the cumulative intelligence of humanity might ultimately scale the summit of understanding, even if the journey proves protracted.

Still, this view acknowledges that unraveling complex concepts can take a long time and require extensive resources.

For instance, unveiling the fundamental principles governing physics and chemistry spanned millennia. While the introduction of AI could potentially expedite this journey, it equally holds the potential to be a protracted endeavor.

The interplay between humanity’s collective intelligence and ASI capabilities remains an ongoing debate, one that will be shaped by the pace of AI development and the continuing growth of human knowledge.