Hugging Face’s Epic Quest to Dominate the AI Kingdom

In the rapidly evolving field of natural language processing (NLP), Hugging Face has emerged as a prominent name, revolutionizing the way we approach language understanding and generation.

Since its founding in 2016, this innovative company has captured the attention and admiration of researchers, developers, and industry professionals alike. With its cutting-edge models, open-source libraries, and collaborative community, Hugging Face has become a driving force behind the advancement of NLP technologies.

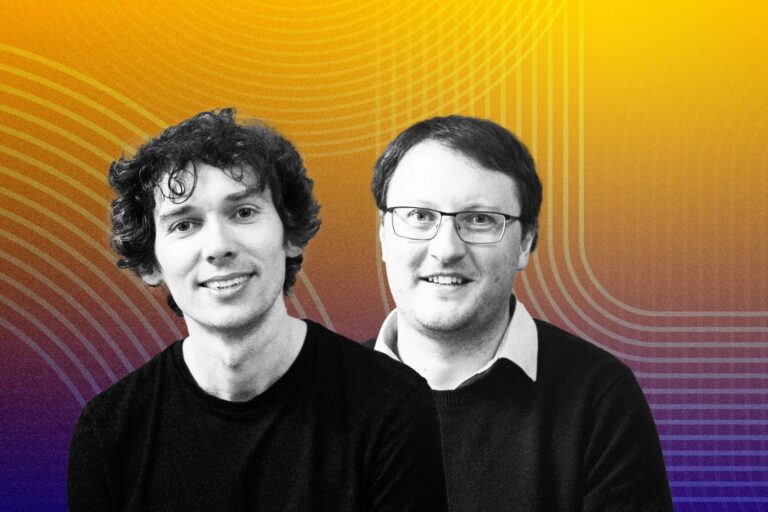

At the core of Hugging Face’s success lies a shared vision to democratize access to state-of-the-art NLP models and tools. The founders, Clément Delangue, Julien Chaumond, and Thomas Wolf, bring together a diverse range of expertise, combining business acumen, software engineering proficiency, and deep understanding of NLP techniques.

The Dynamic Trio Behind Hugging Face: A Symphony of Innovation in NLP

Clément Delangue, who has a background in business and marketing, has played a crucial role in establishing Hugging Face as a leading force in the industry.

After leaving Moodstocks in 2012, Delangue held product and marketing roles at several startups that were later acquired. By 2016, however, he felt a strong desire to build something of his own again.

In New York City, he reconnected with Julien Chaumond, a mathematician and longtime professional acquaintance. Together they enrolled in an online engineering course at Stanford University and formed a study group with a diverse mix of people, including Thomas Wolf, a patent lawyer and musician.

Inspired by the limitations of existing conversational AI assistants like Siri and Alexa, the trio embarked on a challenging mission: to develop an open domain chatbot powered by natural language processing (NLP) that could engage in dynamic and enjoyable conversations on any topic.

Clément Delangue stated: “We started Hugging Face a bit more than four and a half years ago, because we’ve been obsessed with natural language processing … The field of machine learning that applies to text, and we’ve been lucky to create Hugging Face Transformers on GitHub that became the most popular open-source NLP library, that over 5,000 companies are using now to do any sort of NLP, right? Information extraction, right? If you’ve a text, you want to extract information.”

He added: “And we’ve been lucky to see adoption not only from companies, but also from scientists which have been using our platform to share their models with the world, test models of other scientists. We have almost 10,000 models that have been shared, and almost 1,000 datasets that have been shared on the platform to kind of help scientists and practitioners build better NLP models and use that in the product or in their workflows.”

Julien Chaumond, an experienced software engineer, has been instrumental in developing the core technologies and infrastructure that underpin Hugging Face’s tools and platforms.

His technical expertise is evident in the efficient implementation of Hugging Face’s open-source libraries, such as Transformers and Tokenizers, which have gained widespread adoption and have been cited by numerous research papers and industry projects.

Meanwhile, Thomas Wolf’s expertise as a researcher and data scientist specialized in NLP and deep learning has been pivotal in the development of Hugging Face’s state-of-the-art models.

Through his work, the company turned influential models such as Google’s BERT and OpenAI’s GPT series into easy-to-use, openly available implementations, and released models of its own such as DistilBERT, a smaller and faster distilled version of BERT. These contributions helped solidify Hugging Face’s reputation as an industry leader.

Hybrid Approach: Open-Source Extensibility with User-Friendly Interfaces

Hugging Face is dedicated to accelerating the progress of AI by providing a wide range of open-source tools, libraries, and resources specifically tailored for natural language processing (NLP) and machine learning. Their focus on NLP is driven by the understanding that language is a fundamental aspect of human communication, and unlocking its potential is crucial for advancing AI applications across various domains.

The global NLP market is expected to exhibit substantial growth over the next six years. It is projected to grow at a compound annual growth rate (CAGR) of 20%, increasing from $11 billion in 2020 to surpass $35 billion by 2026.
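The quoted figures can be checked with the standard compound annual growth rate formula, end = start × (1 + rate)^years. The short sketch below uses only the numbers quoted above (six compounding years from 2020 to 2026) and shows that 20% a year takes $11 billion to roughly $32.8 billion, so surpassing $35 billion implies a rate closer to 21%.

```python
# Sanity-check of the NLP market projection quoted in the text, using the
# standard CAGR formula: end_value = start_value * (1 + rate) ** years.
# Only the article's own figures are used; nothing else is assumed.


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate needed to go from `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


START_2020 = 11.0    # market size in 2020, USD billions (as quoted)
TARGET_2026 = 35.0   # market size in 2026, USD billions (as quoted)
YEARS = 2026 - 2020  # six compounding periods

projected = project(START_2020, 0.20, YEARS)
needed = implied_cagr(START_2020, TARGET_2026, YEARS)

print(f"$11B at 20% for {YEARS} years -> ${projected:.1f}B")
print(f"CAGR implied by $11B -> $35B: {needed:.1%}")
```

Running it prints about $32.8B for the 20% projection and an implied rate of about 21.3%, which is why the $35 billion figure is best read as "roughly 20% or slightly more" per year.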

This growth will be fueled by various factors, including the availability of extensive datasets, growing interest from businesses in AI applications, advancements in AI research, and the continuous release of more robust language models with increased model parameters.

Central to Hugging Face’s mission is the goal of making AI accessible and usable for developers and researchers worldwide. They recognize that AI development can be complex and resource-intensive, often requiring deep technical knowledge and expertise.

To address this challenge, Hugging Face offers user-friendly tools and libraries that simplify the process of building, training, and deploying state-of-the-art NLP models. By providing accessible resources, Hugging Face enables a broader community of practitioners to leverage AI technologies, democratizing access to cutting-edge capabilities.

Clément Delangue emphasizes Hugging Face’s unique hybrid approach to technology development, combining the extensibility of open source with the practicality of user interfaces.

This approach ensures that users have the flexibility to leverage Hugging Face’s open-source libraries independently, providing them with a comprehensive toolkit for NLP tasks. By simply using the command “pip install transformers,” developers gain access to a rich collection of pre-trained models, tokenizers, and utilities, allowing them to build powerful NLP applications without the need for extensive manual implementation.

Furthermore, Delangue highlights the Hugging Face Model Hub, which serves as a platform for users to discover and explore a wide range of pre-trained models. This hub enables users to find models that suit their specific needs, whether they require language understanding, text generation, or specialized tasks like sentiment analysis or named entity recognition.

The Model Hub facilitates access to cutting-edge models developed by the Hugging Face community, thereby democratizing the use of state-of-the-art NLP techniques.

“We’ve been using open source for a while. We’ve always felt like in this field, you’re always standing on the shoulders of giants of other people on the fields before. We’ve been used to this culture of when you do science, you publish a research paper for research in machine learning, you even want to publish open source versus in the paper, right?” – he said.

It Takes Two to Tango: Hugging Face Leans Hard into Collaboration

Hugging Face places a strong emphasis on collaboration and community engagement, creating a vibrant ecosystem where AI enthusiasts, researchers, and developers can come together. They actively foster collaboration through their open-source approach, encouraging knowledge sharing, idea exchange, and project collaboration within the community.

Hugging Face’s platform allows users to contribute to open-source projects, submit code improvements, and take part in discussions, so the collective intelligence and expertise of the community can drive innovation and push the boundaries of AI development.

Evidence of Hugging Face’s collaboration efforts can be seen through their active GitHub repository and community forums.

On GitHub, Hugging Face hosts numerous open-source projects, including their widely used libraries like Transformers and Tokenizers.

The startup also runs community forums, such as the Hugging Face Community, which give users a space to ask questions, share ideas, and collaborate on projects.

In terms of research and development, Hugging Face maintains a strong commitment to staying at the forefront of the field. They continuously explore and create innovative technologies that address real-world challenges in NLP and machine learning.

One notable example is the success of the Transformer models available through Hugging Face’s library. Models such as BERT (Bidirectional Encoder Representations from Transformers), developed by Google, and GPT (Generative Pre-trained Transformer), developed by OpenAI, have set new benchmarks in NLP tasks, and Hugging Face’s work in making them widely accessible highlights the company’s dedication to pushing the boundaries of what is possible in AI.

These models have been widely adopted and cited in academic research papers, industry projects, and applications across various domains.

Additionally, Hugging Face actively engages with the research community through conferences, workshops, and collaborations. They regularly present their work and contribute to the advancement of the field through publications and presentations at major conferences like NeurIPS, ACL, and EMNLP.

Unstoppable Effort: Hugging Face Is on a Growth Tear

Hugging Face has captured the attention of investors with its groundbreaking advancements in natural language processing (NLP) and machine learning. The company’s ability to revolutionize the field has attracted significant funding, resulting in a total of $160.2 million raised across 5 funding rounds.

Their latest funding round, a Series C, took place on May 9, 2022, solidifying Hugging Face’s position as a frontrunner in the industry. This round saw the participation of notable investors such as Thirty Five Ventures and Sequoia Capital, demonstrating the growing confidence and support for Hugging Face’s vision and technologies.

With such substantial funding, Hugging Face has been able to expand its operations, invest in strategic partnerships, and fuel further innovation. The company’s post-money valuation, estimated at between $1 billion and $10 billion as of May 2022 (widely reported as $2 billion), makes it a unicorn in the AI landscape, garnering attention from both investors and industry experts.

In addition to raising capital, Hugging Face has also made strategic investments of its own. One notable investment took place on November 23, 2022, when Hugging Face participated in PhotoRoom’s $19 million Series A funding round. This investment showcases Hugging Face’s commitment to supporting and nurturing promising ventures in the AI space.

Moreover, the company has grown through acquisitions, completing two to date. The most recent acquisition occurred on December 16, 2021, when Hugging Face acquired Gradio.

By strategically acquiring organizations like Gradio, Hugging Face bolsters its capabilities and expands its offerings, enabling them to provide even more innovative solutions to their users.

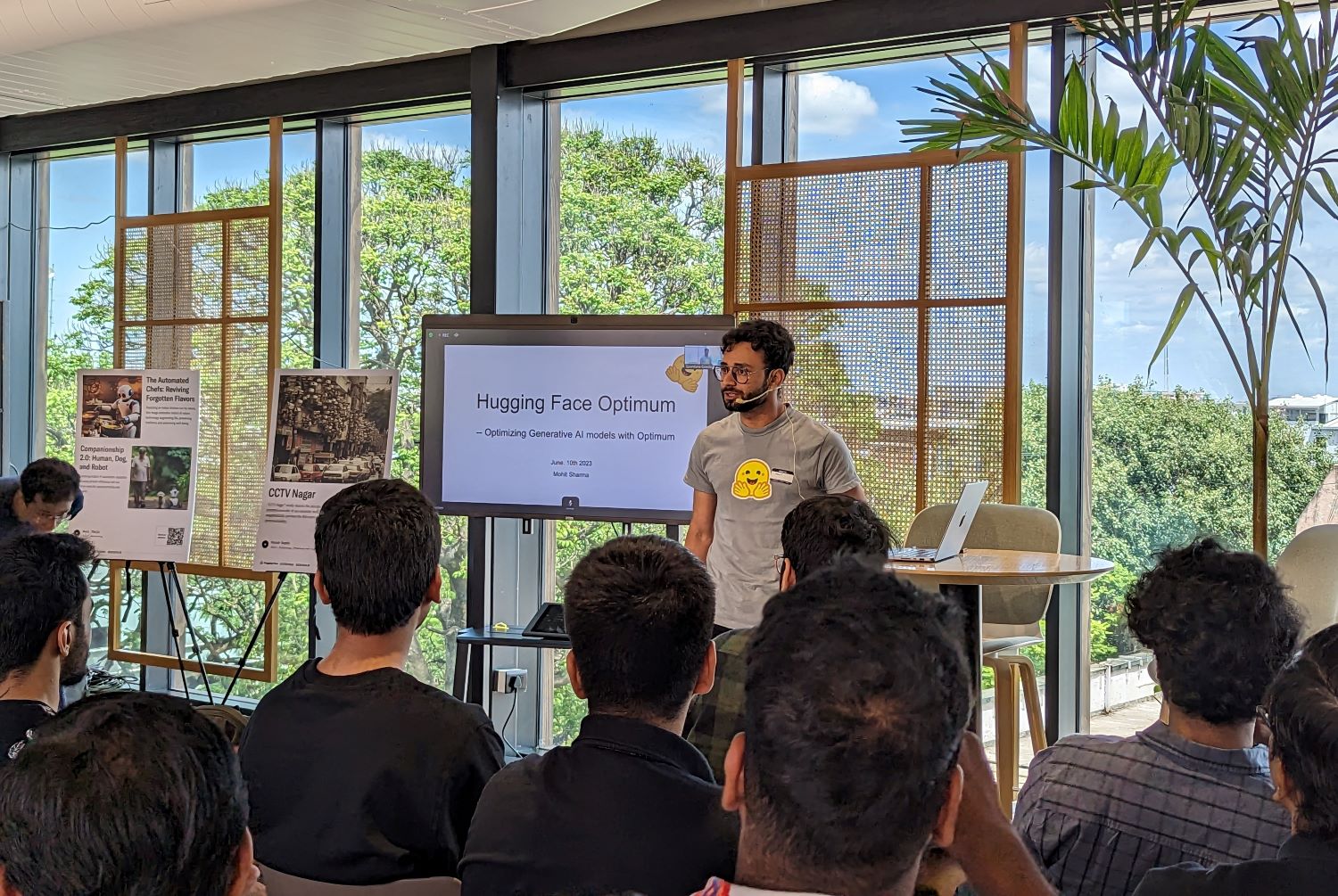

Beyond collaborations and acquisitions, Hugging Face focuses on improving its technology to give end users a seamless experience. That focus drives every Hugging Face product, and BLOOM is a notable example.

Unleashing BLOOM: Multilingual, Human-like Text Generator with Versatile Abilities

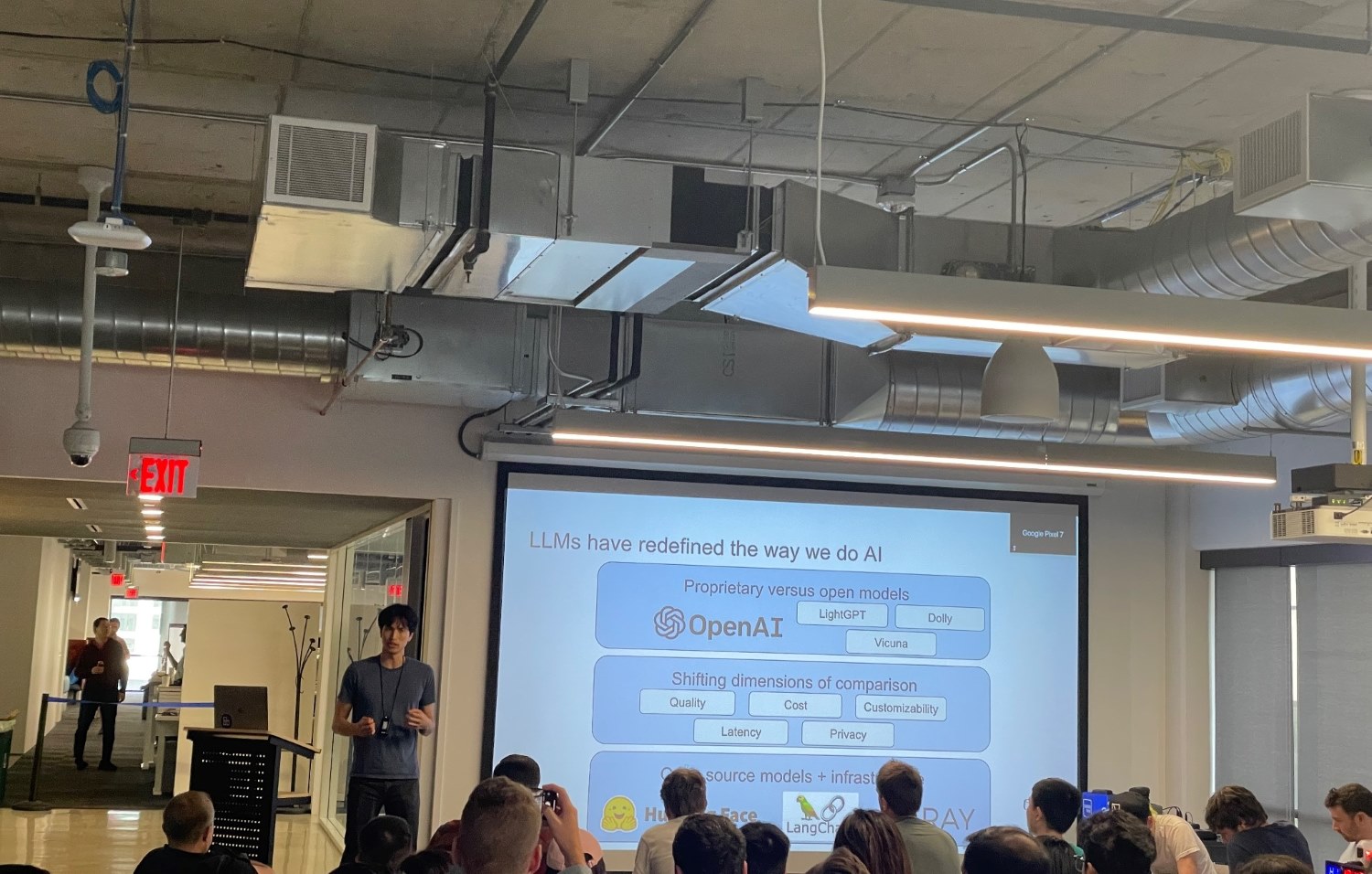

Taking a deeper dive into BLOOM, the open-access large language model Hugging Face released through the BigScience research collaboration, we can see the strategic significance of this development for the company. With an architecture similar to OpenAI’s GPT-3, BLOOM represents Hugging Face’s ambition to compete directly with organizations that offer proprietary language models.

“GPT-3 is monolingual, and BLOOM was designed from the start to be multilingual so it was trained on several languages, and also to incorporate a significant amount of programming language data,” Teven Le Scao, research engineer at Hugging Face, told VentureBeat. “BLOOM supports 46 human languages and 13 programming languages — so that’s a very sizable difference.”

Historically, AI language models have primarily focused on English and Chinese languages. However, BLOOM aims to broaden the scope by offering new possibilities for French, Spanish, and Arabic speakers. This development addresses the need for open language models in these languages, which were previously unavailable or limited in availability.

Looking ahead, Hugging Face has identified the MLOps market as a key opportunity for further growth. MLOps encompasses model management, deployment, and monitoring, and it plays a crucial role in the lifecycle of machine learning models. Given Hugging Face’s expertise in natural language processing and their established customer base, expanding into the MLOps market is a natural progression for the company.

By leveraging their existing autoML solutions, which automate the machine learning model development process, Hugging Face can cross-sell MLOps products to their customers. This approach allows them to provide end-to-end solutions, covering the entire lifecycle of machine learning models. By entering the multi-billion-dollar MLOps market, Hugging Face can tap into a lucrative sector and further enhance their revenue streams.

The Bottom Line

When asked for his key advice to CEOs embarking on a digital innovation journey, Thomas Wolf emphasized:

“CEOs just beginning the digitization journey should work closely with their dedicated IT team or technology partner to evaluate and ensure that their digital strategies match their organizations’ vision and culture…The leadership should also be adaptive—open to feedback and new ideas so that they can make necessary changes during the implementation and achieve the best possible result.”

Looking towards the future, Hugging Face is poised to continue its remarkable trajectory in the digital innovation landscape. Its adaptability keeps the company agile and responsive to the evolving needs of its customers and the industry as a whole.